category.json

{

"label": "Get Started",

"position": 1,

"link": {

"type": "generated-index",

"description": "RAGFlow Quick Start"

}

}

configurations.md

---

sidebar_position: 1

slug: /configurations

---

# Configuration

Configurations for deploying RAGFlow via Docker.

## Guidelines

When it comes to system configurations, you will need to manage the following files:

- [.env](https://github.com/infiniflow/ragflow/blob/main/docker/.env): Contains important environment variables for Docker.

- [service_conf.yaml.template](https://github.com/infiniflow/ragflow/blob/main/docker/service_conf.yaml.template): Configures the back-end services. It specifies the system-level configuration for RAGFlow and is used by its API server and task executor. Upon container startup, the `service_conf.yaml` file will be generated based on this template file. This process replaces any environment variables within the template, allowing for dynamic configuration tailored to the container's environment.

- [docker-compose.yml](https://github.com/infiniflow/ragflow/blob/main/docker/docker-compose.yml): The Docker Compose file for starting up the RAGFlow service.

To update the default HTTP serving port (80), go to [docker-compose.yml](https://github.com/infiniflow/ragflow/blob/main/docker/docker-compose.yml) and change `80:80` to `<YOUR_SERVING_PORT>:80`.
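If you prefer to script this edit, the following is a minimal sketch. It assumes the mapping appears in **docker-compose.yml** as an unquoted list entry such as `- 80:80`. `set_serving_port` is a hypothetical helper, not part of RAGFlow.

```bash
# Hypothetical helper: change the host side of the "80:80" port mapping.
# Assumes the mapping is an unquoted list entry, e.g. "      - 80:80".
set_serving_port() {
  local compose_file="$1" new_port="$2"
  # Write to a temp file first so the same command works with GNU and BSD sed,
  # then copy it back so the original file keeps its permissions.
  sed "s/^\([[:space:]]*- \)80:80\$/\1${new_port}:80/" "$compose_file" > "$compose_file.tmp" &&
    cat "$compose_file.tmp" > "$compose_file" &&
    rm -f "$compose_file.tmp"
}
```

For example, `set_serving_port docker/docker-compose.yml 8080` maps host port 8080 to port 80 inside the container, so RAGFlow would then be reached at `http://<IP_OF_YOUR_MACHINE>:8080`.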

:::tip NOTE

Updates to the above configurations require restarting all containers to take effect:

```bash
docker compose -f docker/docker-compose.yml up -d
```

:::

## Docker Compose

- **docker-compose.yml**

Sets up the environment for RAGFlow and its dependencies.

- **docker-compose-base.yml**

Sets up the environment for RAGFlow's dependencies: Elasticsearch/[Infinity](https://github.com/infiniflow/infinity), MySQL, MinIO, and Redis.

:::danger IMPORTANT

We do not actively maintain **docker-compose-CN-oc9.yml**, **docker-compose-gpu-CN-oc9.yml**, or **docker-compose-gpu.yml**, so use them at your own risk. However, you are welcome to file a pull request to improve any of them.

:::

## Docker environment variables

The [.env](https://github.com/infiniflow/ragflow/blob/main/docker/.env) file contains important environment variables for Docker.

### Elasticsearch

- `STACK_VERSION`

The version of Elasticsearch. Defaults to `8.11.3`.

- `ES_PORT`

The port used to expose the Elasticsearch service to the host machine, allowing **external** access to the service running inside the Docker container. Defaults to `1200`.

- `ELASTIC_PASSWORD`

The password for Elasticsearch.

### Kibana

- `KIBANA_PORT`

The port used to expose the Kibana service to the host machine, allowing **external** access to the service running inside the Docker container. Defaults to `6601`.

- `KIBANA_USER`

The username for Kibana. Defaults to `rag_flow`.

- `KIBANA_PASSWORD`

The password for Kibana. Defaults to `infini_rag_flow`.

### Resource management

- `MEM_LIMIT`

The maximum amount of memory, in bytes, that *a specific* Docker container can use while running. Defaults to `8073741824`.
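Because `MEM_LIMIT` is expressed in bytes, a little shell arithmetic helps if you think in GiB. This is a minimal sketch; `gib_to_bytes` is a hypothetical helper. For reference, the default `8073741824` is roughly 7.5 GiB.

```bash
# Convert a whole number of GiB to bytes (1 GiB = 1024^3 = 1073741824 bytes).
gib_to_bytes() {
  echo $(( $1 * 1024 * 1024 * 1024 ))
}

# Print a line to paste into docker/.env, here for a 16 GiB limit:
echo "MEM_LIMIT=$(gib_to_bytes 16)"   # prints MEM_LIMIT=17179869184
```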

### MySQL

- `MYSQL_PASSWORD`

The password for MySQL.

- `MYSQL_PORT`

The port used to expose the MySQL service to the host machine, allowing **external** access to the MySQL database running inside the Docker container. Defaults to `5455`.

### MinIO

RAGFlow utilizes MinIO as its object storage solution, leveraging its scalability to store and manage all uploaded files.

- `MINIO_CONSOLE_PORT`

The port used to expose the MinIO console interface to the host machine, allowing **external** access to the web-based console running inside the Docker container. Defaults to `9001`.

- `MINIO_PORT`

The port used to expose the MinIO API service to the host machine, allowing **external** access to the MinIO object storage service running inside the Docker container. Defaults to `9000`.

- `MINIO_USER`

The username for MinIO.

- `MINIO_PASSWORD`

The password for MinIO.

### Redis

- `REDIS_PORT`

The port used to expose the Redis service to the host machine, allowing **external** access to the Redis service running inside the Docker container. Defaults to `6379`.

- `REDIS_PASSWORD`

The password for Redis.

### RAGFlow

- `SVR_HTTP_PORT`

The port used to expose RAGFlow's HTTP API service to the host machine, allowing **external** access to the service running inside the Docker container. Defaults to `9380`.

- `RAGFLOW_IMAGE`

The Docker image edition. Available editions:

- `infiniflow/ragflow:v0.18.0-slim` (default): The RAGFlow Docker image without embedding models.

- `infiniflow/ragflow:v0.18.0`: The RAGFlow Docker image with embedding models including:

- Built-in embedding models:

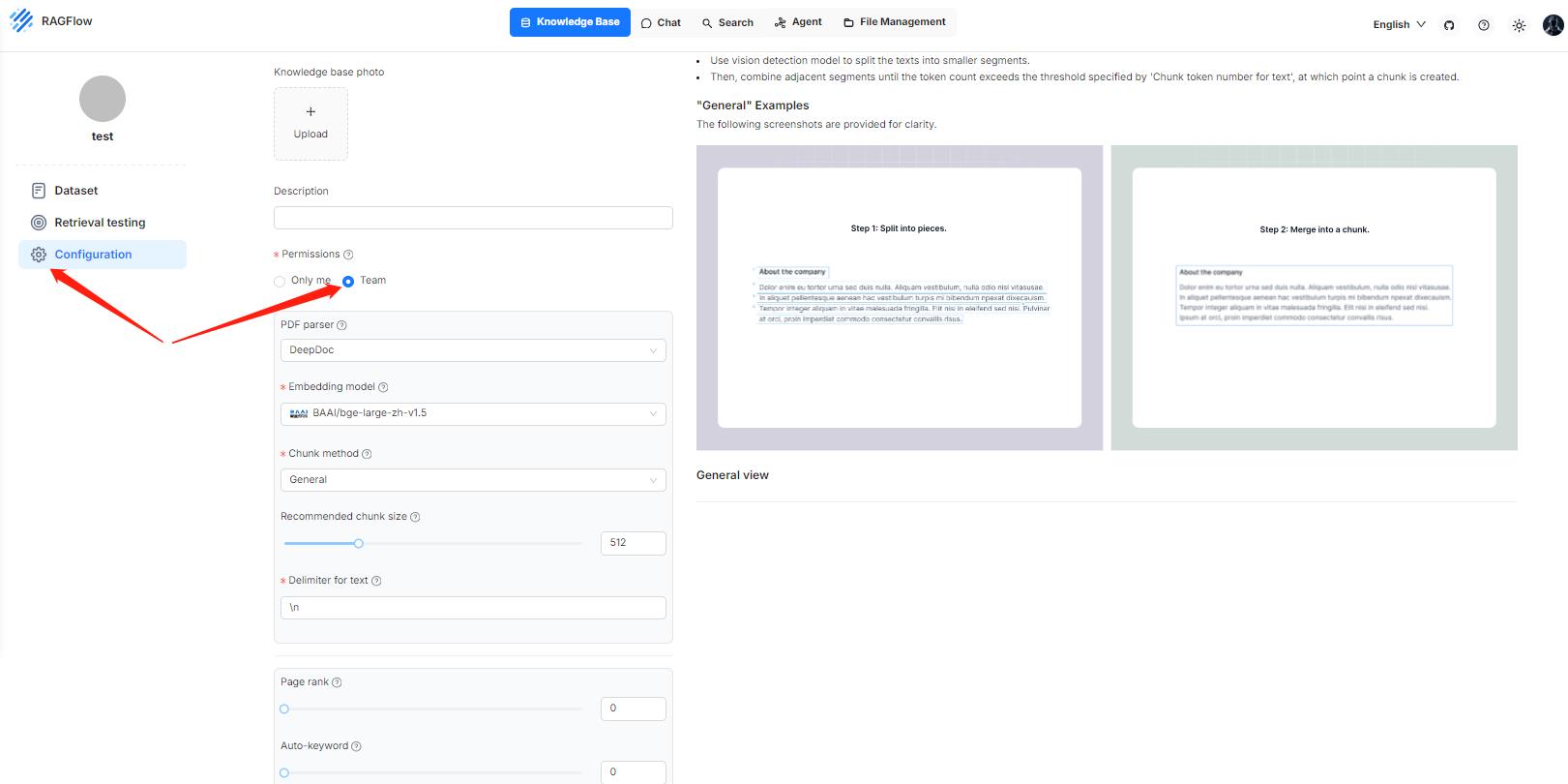

- `BAAI/bge-large-zh-v1.5`

- `maidalun1020/bce-embedding-base_v1`

:::tip NOTE

If you cannot download the RAGFlow Docker image, try one of the following mirrors:

- For the `nightly-slim` edition:

  - `RAGFLOW_IMAGE=swr.cn-north-4.myhuaweicloud.com/infiniflow/ragflow:nightly-slim`, or

  - `RAGFLOW_IMAGE=registry.cn-hangzhou.aliyuncs.com/infiniflow/ragflow:nightly-slim`.

- For the `nightly` edition:

  - `RAGFLOW_IMAGE=swr.cn-north-4.myhuaweicloud.com/infiniflow/ragflow:nightly`, or

  - `RAGFLOW_IMAGE=registry.cn-hangzhou.aliyuncs.com/infiniflow/ragflow:nightly`.

:::
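To switch images without editing **.env** by hand, you could use a small helper like the one below. This is a sketch under stated assumptions: `set_env_var` is hypothetical, it rewrites only the active (uncommented) `KEY=` line so commented-out alternatives stay as they are, and it appends the key if no such line exists.

```bash
# Hypothetical helper: set KEY=VALUE in an .env file.
# Replaces the active (uncommented) KEY= line, or appends one if none exists.
# "|" is the sed delimiter because image references contain "/";
# values containing "|", "&" or "\" would need escaping.
set_env_var() {
  local env_file="$1" key="$2" value="$3"
  if grep -q "^${key}=" "$env_file"; then
    sed "s|^${key}=.*|${key}=${value}|" "$env_file" > "$env_file.tmp" &&
      cat "$env_file.tmp" > "$env_file" &&
      rm -f "$env_file.tmp"
  else
    printf '%s=%s\n' "$key" "$value" >> "$env_file"
  fi
}
```

For example, `set_env_var docker/.env RAGFLOW_IMAGE registry.cn-hangzhou.aliyuncs.com/infiniflow/ragflow:nightly-slim`, followed by a restart of the containers.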

### Timezone

- `TIMEZONE`

The local time zone. Defaults to `'Asia/Shanghai'`.

### Hugging Face mirror site

- `HF_ENDPOINT`

The mirror site for huggingface.co. It is disabled by default. You can uncomment this line if you have limited access to the primary Hugging Face domain.

### macOS

- `MACOS`

Optimizations for macOS. It is disabled by default. You can uncomment this line if your OS is macOS.

### User registration

- `REGISTER_ENABLED`

- `1`: (Default) Enable user registration.

- `0`: Disable user registration.

## Service configuration

[service_conf.yaml.template](https://github.com/infiniflow/ragflow/blob/main/docker/service_conf.yaml.template) specifies the system-level configuration for RAGFlow and is used by its API server and task executor.
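To illustrate the substitution step that turns the template into `service_conf.yaml`, here is a minimal Bash sketch. It assumes the template's placeholders take the form `${VAR}` or `${VAR:-default}`. `render_template` is a hypothetical helper, not the code RAGFlow's container actually runs at startup.

```bash
# Minimal sketch (requires Bash): expand ${VAR} and ${VAR:-default} placeholders
# in a template, preferring values from the environment. Not RAGFlow's own code.
render_template() {
  local line out name value
  local re='[$][{]([A-Za-z_][A-Za-z0-9_]*)(:-([^}]*))?[}]'
  while IFS= read -r line || [ -n "$line" ]; do
    out=""
    # Copy the text before each placeholder, then its value, and continue after it.
    while [[ $line =~ $re ]]; do
      name="${BASH_REMATCH[1]}"
      value="${!name:-${BASH_REMATCH[3]}}"   # environment value, else the default
      out+="${line%%"${BASH_REMATCH[0]}"*}${value}"
      line="${line#*"${BASH_REMATCH[0]}"}"
    done
    printf '%s\n' "${out}${line}"
  done < "$1"
}
```

For example, `MYSQL_PASSWORD=secret render_template docker/service_conf.yaml.template` would print the rendered YAML with `secret` in place of a `${MYSQL_PASSWORD:-...}` placeholder, and the default wherever the variable is unset.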

### `ragflow`

- `host`: The API server's IP address inside the Docker container. Defaults to `0.0.0.0`.

- `port`: The API server's serving port inside the Docker container. Defaults to `9380`.

### `mysql`

- `name`: The MySQL database name. Defaults to `rag_flow`.

- `user`: The username for MySQL.

- `password`: The password for MySQL.

- `port`: The MySQL serving port inside the Docker container. Defaults to `3306`.

- `max_connections`: The maximum number of concurrent connections to the MySQL database. Defaults to `100`.

- `stale_timeout`: Timeout in seconds.

### `minio`

- `user`: The username for MinIO.

- `password`: The password for MinIO.

- `host`: The MinIO serving IP *and* port inside the Docker container. Defaults to `minio:9000`.

### `oauth`

The OAuth configuration for signing up or signing in to RAGFlow using a third-party account. It is disabled by default. To enable this feature, uncomment the corresponding lines in **service_conf.yaml.template**.

- `github`: The GitHub authentication settings for your application. Visit the [GitHub Developer Settings](https://github.com/settings/developers) page to obtain your client_id and secret_key.

#### OAuth/OIDC

RAGFlow supports OAuth/OIDC authentication through the following routes:

- `/login/<channel>`: Initiates the OAuth flow for the specified channel.

- `/oauth/callback/<channel>`: Handles the OAuth callback after successful authentication.

The callback URL should be configured in your OAuth provider as:

```
https://your-app.com/oauth/callback/<channel>
```

For detailed instructions on configuring **service_conf.yaml.template**, please refer to [Usage](https://github.com/infiniflow/ragflow/blob/main/api/apps/auth/README.md#usage).
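The callback URL is your application's base URL followed by the callback route. A tiny sketch, assuming the channel name matches the provider's key in the `oauth` section (for example, `github`); `oauth_callback_url` is a hypothetical helper.

```bash
# Hypothetical helper: build the callback URL to register with an OAuth provider,
# following the /oauth/callback/<channel> route.
oauth_callback_url() {
  local base_url="${1%/}" channel="$2"   # drop one trailing slash from the base URL
  printf '%s/oauth/callback/%s\n' "$base_url" "$channel"
}

oauth_callback_url "https://your-app.com/" github   # prints https://your-app.com/oauth/callback/github
```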

### `user_default_llm`

The default LLM to use for a new RAGFlow user. It is disabled by default. To enable this feature, uncomment the corresponding lines in **service_conf.yaml.template**.

- `factory`: The LLM supplier. Available options:

  - `"OpenAI"`

  - `"DeepSeek"`

  - `"Moonshot"`

  - `"Tongyi-Qianwen"`

  - `"VolcEngine"`

  - `"ZHIPU-AI"`

- `api_key`: The API key for the specified LLM. You need to obtain this key from your LLM supplier.

:::tip NOTE

If you do not set the default LLM here, configure the default LLM on the **Settings** page in the RAGFlow UI.

:::

faq.mdx

---

sidebar_position: 10

slug: /faq

---

# FAQs

Answers to questions about general features, troubleshooting, usage, and more.

---

import TOCInline from '@theme/TOCInline';

<TOCInline toc={toc} />

## General features

---

### What sets RAGFlow apart from other RAG products?

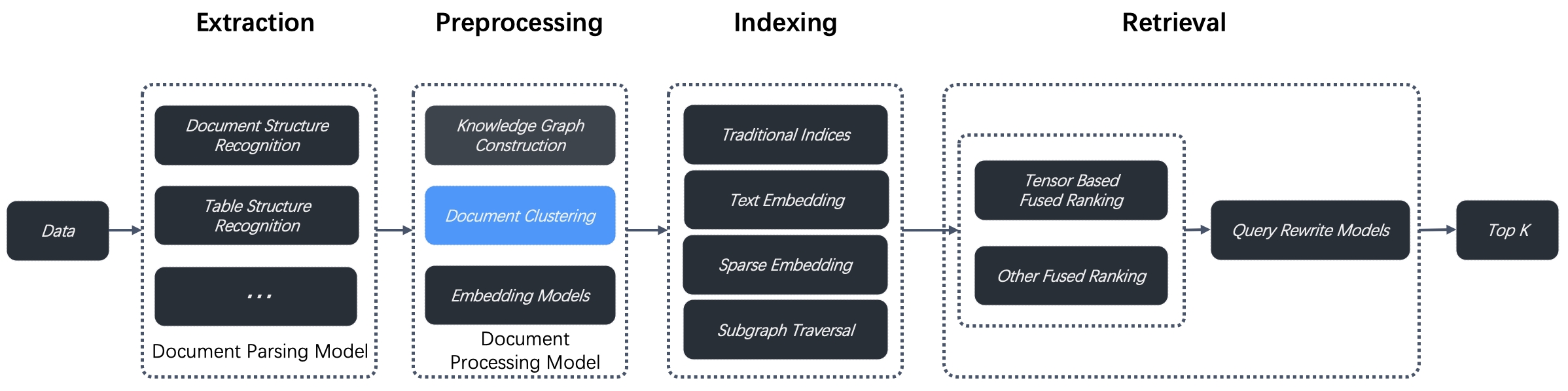

The "garbage in garbage out" status quo remains unchanged despite the fact that LLMs have advanced Natural Language Processing (NLP) significantly. In response, RAGFlow introduces two unique features compared to other Retrieval-Augmented Generation (RAG) products.

- Fine-grained document parsing: Document parsing involves images and tables, with the flexibility for you to intervene as needed.

- Traceable answers with reduced hallucinations: You can trust RAGFlow's responses as you can view the citations and references supporting them.

---

### Differences between RAGFlow full edition and RAGFlow slim edition?

Each RAGFlow release is available in two editions:

- **Slim edition**: excludes built-in embedding models and is identified by a **-slim** suffix added to the version name. Example: `infiniflow/ragflow:v0.18.0-slim`

- **Full edition**: includes built-in embedding models and has no suffix added to the version name. Example: `infiniflow/ragflow:v0.18.0`

---

### Which embedding models can be deployed locally?

RAGFlow offers two Docker image editions, `v0.18.0-slim` and `v0.18.0`:

- `infiniflow/ragflow:v0.18.0-slim` (default): The RAGFlow Docker image without embedding models.

- `infiniflow/ragflow:v0.18.0`: The RAGFlow Docker image with embedding models including:

  - Built-in embedding models:

    - `BAAI/bge-large-zh-v1.5`

    - `maidalun1020/bce-embedding-base_v1`

  - Embedding models that will be downloaded once you select them in the RAGFlow UI:

    - `BAAI/bge-base-en-v1.5`

    - `BAAI/bge-large-en-v1.5`

    - `BAAI/bge-small-en-v1.5`

    - `BAAI/bge-small-zh-v1.5`

    - `jinaai/jina-embeddings-v2-base-en`

    - `jinaai/jina-embeddings-v2-small-en`

    - `nomic-ai/nomic-embed-text-v1.5`

    - `sentence-transformers/all-MiniLM-L6-v2`

---

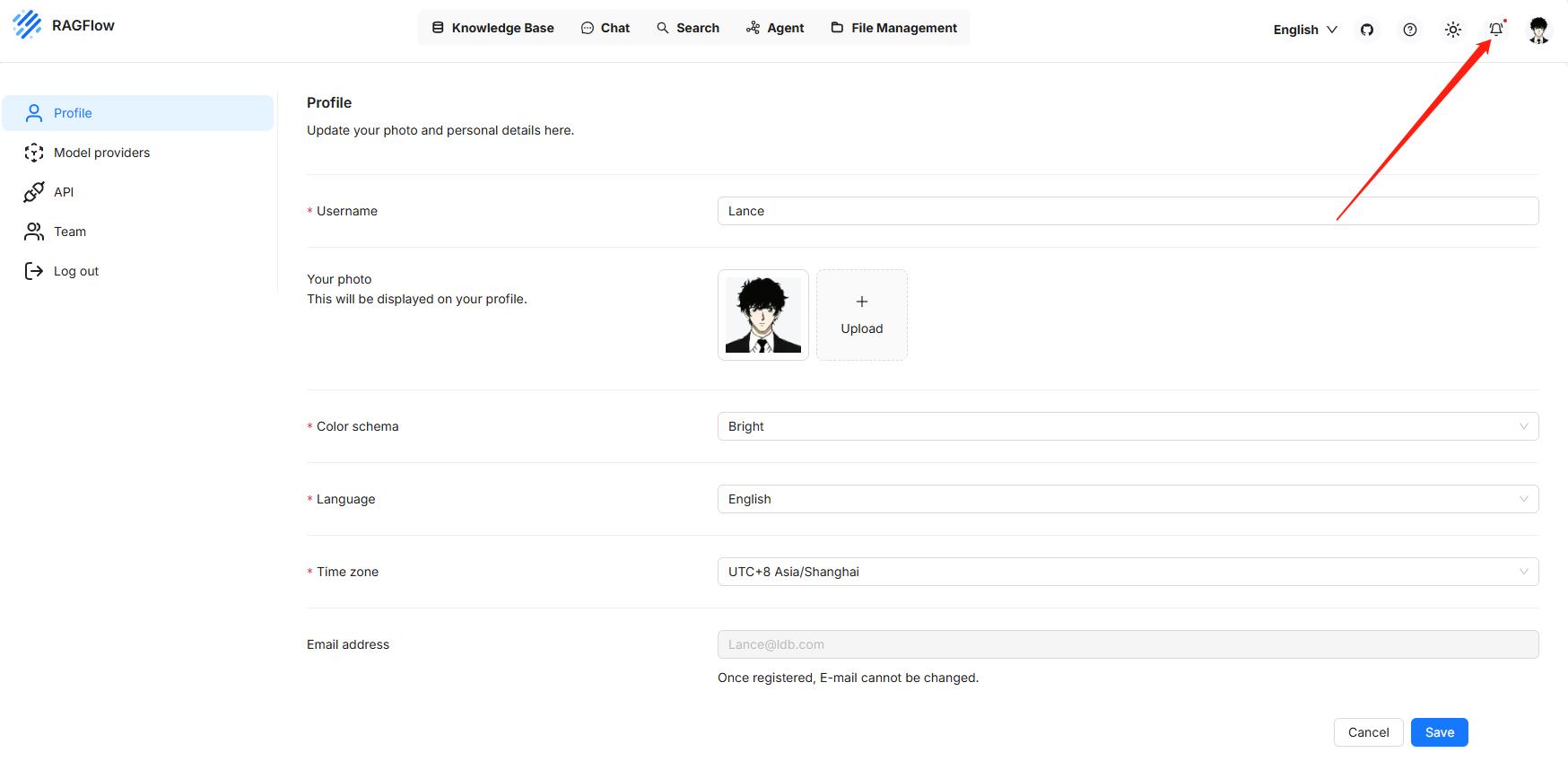

### Where to find the version of RAGFlow? How to interpret it?

You can find the RAGFlow version number on the **System** page of the UI:

If you build RAGFlow from source, the version number is also in the system log:

```
    ____   ___    ______ ______ __
   / __ \ /   |  / ____// ____// /____  _      __
  / /_/ // /| | / / __ / /_   / // __ \| | /| / /
 / _, _// ___ |/ /_/ // __/  / // /_/ /| |/ |/ /
/_/ |_|/_/  |_|\____//_/    /_/ \____/ |__/|__/

2025-02-18 10:10:43,835 INFO 1445658 RAGFlow version: v0.15.0-50-g6daae7f2 full
```

Where:

- `v0.15.0`: The officially published release.

- `50`: The number of git commits since the official release.

- `g6daae7f2`: `g` is the prefix, and `6daae7f2` is the abbreviated ID of the current commit.

- `full`/`slim`: The RAGFlow edition.

  - `full`: The full RAGFlow edition.

  - `slim`: The RAGFlow edition without embedding models and Python packages.
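The `<release>-<commits>-g<hash>` part follows the same layout as `git describe` output, so it can be split with plain shell parameter expansion. A minimal sketch: `parse_ragflow_version` is hypothetical, and a build without the commit suffix (for example, `v0.18.0 full`) is reported with `commits=0` and an empty commit.

```bash
# Hypothetical helper: split "<release>-<commits>-g<hash> <edition>" into its parts.
parse_ragflow_version() {
  local version="${1% *}" edition="${1##* }"   # text before / after the last space
  local release="${version%%-*}" commits=0 hash=""
  case "$version" in
    *-*-g*)
      local rest="${version#*-}"   # e.g. 50-g6daae7f2
      commits="${rest%%-*}"        # e.g. 50
      hash="${rest#*-g}"           # e.g. 6daae7f2
      ;;
  esac
  printf 'release=%s commits=%s commit=%s edition=%s\n' "$release" "$commits" "$hash" "$edition"
}

parse_ragflow_version "v0.15.0-50-g6daae7f2 full"
# prints: release=v0.15.0 commits=50 commit=6daae7f2 edition=full
```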

---

### Differences between demo.ragflow.io and a locally deployed open-source RAGFlow service?

demo.ragflow.io demonstrates the capabilities of RAGFlow Enterprise. Its DeepDoc models are pre-trained using proprietary data and it offers much more sophisticated team permission controls. Essentially, demo.ragflow.io serves as a preview of RAGFlow's forthcoming SaaS (Software as a Service) offering.

You can deploy an open-source RAGFlow service and call it from a Python client or through RESTful APIs. However, this is not supported on demo.ragflow.io.

---

### Why does it take longer for RAGFlow to parse a document than LangChain?

We put painstaking effort into document pre-processing tasks like layout analysis, table structure recognition, and OCR (Optical Character Recognition) using our vision models. This contributes to the additional time required.

---

### Why does RAGFlow require more resources than other projects?

RAGFlow has a number of built-in models for document structure parsing, which account for the additional computational resources.

---

### Which architectures or devices does RAGFlow support?

We officially support x86 CPUs and NVIDIA GPUs. While we also test RAGFlow on ARM64 platforms, we do not maintain RAGFlow Docker images for ARM. If you are on an ARM platform, follow [this guide](./develop/build_docker_image.mdx) to build a RAGFlow Docker image.

---

### Do you offer an API for integration with third-party applications?

The corresponding APIs are now available. See the [RAGFlow HTTP API Reference](./references/http_api_reference.md) or the [RAGFlow Python API Reference](./references/python_api_reference.md) for more information.

---

### Do you support stream output?

Yes, we do.

---

### Do you support sharing dialogue through URL?

No, this feature is not supported.

---

### Do you support multiple rounds of dialogues, referencing previous dialogues as context for the current query?

Yes, we support enhancing user queries based on existing context of an ongoing conversation:

1. On the **Chat** page, hover over the desired assistant and select **Edit**.

2. In the **Chat Configuration** popup, click the **Prompt engine** tab.

3. Switch on **Multi-turn optimization** to enable this feature.

---

### Key differences between AI search and chat?

- **AI search**: This is a single-turn AI conversation using a predefined retrieval strategy (a hybrid search of weighted keyword similarity and weighted vector similarity) and the system's default chat model. It does not involve advanced RAG strategies like knowledge graph, auto-keyword, or auto-question. Retrieved chunks will be listed below the chat model's response.

- **AI chat**: This is a multi-turn AI conversation where you can define your retrieval strategy (a weighted reranking score can be used to replace the weighted vector similarity in a hybrid search) and choose your chat model. In an AI chat, you can configure advanced RAG strategies, such as knowledge graphs, auto-keyword, and auto-question, for your specific case. Retrieved chunks are not displayed along with the answer.

When debugging your chat assistant, you can use AI search as a reference to verify your model settings and retrieval strategy.
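To make "weighted" concrete, here is a sketch of how a hybrid score can combine the two similarities. It assumes the keyword and vector weights sum to 1, so a single vector-similarity weight `w` determines both. `hybrid_score` and the sample values are hypothetical, and RAGFlow's internal scoring may differ in detail.

```bash
# Illustrative weighted hybrid score: (1 - w) * keyword_sim + w * vector_sim,
# where w is the vector-similarity weight. Sample values are not RAGFlow defaults.
hybrid_score() {
  awk -v kw="$1" -v vec="$2" -v w="$3" 'BEGIN { printf "%.4f\n", (1 - w) * kw + w * vec }'
}

hybrid_score 0.8 0.6 0.3   # prints 0.7400
```

In an AI chat, a reranking score can take the place of `vector_sim` in the same formula.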

---

## Troubleshooting

---

### How to build the RAGFlow image from scratch?

See [Build a RAGFlow Docker image](./develop/build_docker_image.mdx).

---

### Cannot access https://huggingface.co

A locally deployed RAGFlow downloads OCR and embedding models from the [Hugging Face website](https://huggingface.co) by default. If your machine cannot access this site, the following error occurs and PDF parsing fails:

```
FileNotFoundError: [Errno 2] No such file or directory: '/root/.cache/huggingface/hub/models--InfiniFlow--deepdoc/snapshots/be0c1e50eef6047b412d1800aa89aba4d275f997/ocr.res'
```

To fix this issue, use https://hf-mirror.com instead:

1. Stop all containers and remove all related resources:

```bash
cd ragflow/docker/
docker compose down
```

2. Uncomment the following line in **ragflow/docker/.env**:

```
# HF_ENDPOINT=https://hf-mirror.com
```

3. Start up the server:

```bash
docker compose up -d
```
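Step 2 can also be scripted. A minimal sketch, assuming the line appears in **.env** as shown above (a `#` followed by optional spaces); `uncomment_env_var` is a hypothetical helper, and it would uncomment every matching line if the key is commented out more than once.

```bash
# Hypothetical helper: uncomment "# KEY=value" lines in an .env file.
# Other lines, including unrelated comments, are left unchanged.
uncomment_env_var() {
  local env_file="$1" key="$2"
  sed "s/^#[[:space:]]*\(${key}=\)/\1/" "$env_file" > "$env_file.tmp" &&
    cat "$env_file.tmp" > "$env_file" &&
    rm -f "$env_file.tmp"
}
```

From **ragflow/docker/**, run `uncomment_env_var .env HF_ENDPOINT` between `docker compose down` and `docker compose up -d`.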

---

### `MaxRetryError: HTTPSConnectionPool(host='hf-mirror.com', port=443)`

This error suggests that you do not have Internet access or are unable to connect to hf-mirror.com. Try the following:

1. Manually download the resource files from [huggingface.co/InfiniFlow/deepdoc](https://huggingface.co/InfiniFlow/deepdoc) to your local folder **~/deepdoc**.

2. Add a volume to the RAGFlow server's `volumes` list in **docker-compose.yml**, for example:

```
- ~/deepdoc:/ragflow/rag/res/deepdoc
```
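Before restarting, a quick check can confirm that the files landed where the volume expects them. This sketch only looks for `ocr.res`, the file named in the `FileNotFoundError` above; the model folder contains other files too, so a pass is necessary rather than sufficient. `check_deepdoc_dir` is a hypothetical helper.

```bash
# Hypothetical helper: succeed if the local DeepDoc folder contains ocr.res.
check_deepdoc_dir() {
  local dir="${1:-$HOME/deepdoc}"
  if [ -f "$dir/ocr.res" ]; then
    echo "found $dir/ocr.res"
  else
    echo "missing $dir/ocr.res" >&2
    return 1
  fi
}
```

If this machine has no Internet access, you could download the files on another machine, for example with `huggingface-cli download InfiniFlow/deepdoc --local-dir ~/deepdoc` from the `huggingface_hub` package, and copy the folder over.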

---

### `WARNING: can't find /raglof/rag/res/borker.tm`

Ignore this warning and continue. All system warnings can be ignored.

---

### `network anomaly There is an abnormality in your network and you cannot connect to the server.`

You cannot log in to RAGFlow until the server is fully initialized. To check, run `docker logs -f ragflow-server`.

*The server is successfully initialized if your system displays the following:*

```
    ____   ___    ______ ______ __
   / __ \ /   |  / ____// ____// /____  _      __
  / /_/ // /| | / / __ / /_   / // __ \| | /| / /
 / _, _// ___ |/ /_/ // __/  / // /_/ /| |/ |/ /
/_/ |_|/_/  |_|\____//_/    /_/ \____/ |__/|__/

 * Running on all addresses (0.0.0.0)
 * Running on http://127.0.0.1:9380
 * Running on http://x.x.x.x:9380
INFO:werkzeug:Press CTRL+C to quit
```

---

### `Realtime synonym is disabled, since no redis connection`

Ignore this warning and continue. All system warnings can be ignored.

---

### Why does my document parsing stall at under one percent?

Click the red cross beside the 'parsing status' bar, then restart the parsing process to see if the issue remains. If the issue persists and your RAGFlow is deployed locally, try the following:

1. Check the log of your RAGFlow server to see if it is running properly:

```bash
docker logs -f ragflow-server
```

2. Check if the **task_executor.py** process exists.

3. Check if your RAGFlow server can access hf-mirror.com or huggingface.co.

---

### Why does my pdf parsing stall near completion, while the log does not show any error?

Click the red cross beside the 'parsing status' bar, then restart the parsing process to see if the issue remains. If the issue persists and your RAGFlow is deployed locally, the parsing process is likely killed due to insufficient RAM. Try increasing your memory allocation by increasing the `MEM_LIMIT` value in **docker/.env**.

:::note

Ensure that you restart your RAGFlow server for your changes to take effect!

```bash
docker compose stop
docker compose up -d
```

:::

---

### `Index failure`

An index failure usually indicates an unavailable Elasticsearch service.

---

### How to check the log of RAGFlow?

```bash

tail -f ragflow/docker/ragflow-logs/*.log

```

---

### How to check the status of each component in RAGFlow?

1. Check the status of the Docker containers:

```bash
$ docker ps
```

*The following is an example result:*

```
5bc45806b680 infiniflow/ragflow:latest "./entrypoint.sh" 11 hours ago Up 11 hours 0.0.0.0:80->80/tcp, :::80->80/tcp, 0.0.0.0:443->443/tcp, :::443->443/tcp, 0.0.0.0:9380->9380/tcp, :::9380->9380/tcp ragflow-server
91220e3285dd docker.elastic.co/elasticsearch/elasticsearch:8.11.3 "/bin/tini -- /usr/l…" 11 hours ago Up 11 hours (healthy) 9300/tcp, 0.0.0.0:9200->9200/tcp, :::9200->9200/tcp ragflow-es-01
d8c86f06c56b mysql:5.7.18 "docker-entrypoint.s…" 7 days ago Up 16 seconds (healthy) 0.0.0.0:3306->3306/tcp, :::3306->3306/tcp ragflow-mysql
cd29bcb254bc quay.io/minio/minio:RELEASE.2023-12-20T01-00-02Z "/usr/bin/docker-ent…" 2 weeks ago Up 11 hours 0.0.0.0:9001->9001/tcp, :::9001->9001/tcp, 0.0.0.0:9000->9000/tcp, :::9000->9000/tcp ragflow-minio
```

2. Follow [this document](./guides/run_health_check.md) to check the health status of the Elasticsearch service.

:::danger IMPORTANT

The status of a Docker container does not necessarily reflect the status of the service. You may find that your services are unhealthy even when the corresponding Docker containers are up and running. Possible reasons for this include network failures, incorrect port numbers, or DNS issues.

:::
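To check one container from a script rather than by eye, you could filter `docker ps` output for the `(healthy)` marker. A sketch under stated assumptions: `is_container_healthy` is hypothetical, and only containers with a Docker health check report this marker. In the example output above, that means `ragflow-es-01` and `ragflow-mysql`, not `ragflow-server` or `ragflow-minio`.

```bash
# Hypothetical helper: succeed if the named container is reported as "(healthy)"
# in "name status" lines read from stdin. "(unhealthy)" does not match.
is_container_healthy() {
  local name="$1"
  grep -Eq "^${name}[[:space:]].*\(healthy\)"
}
```

For example: `docker ps --format '{{.Names}} {{.Status}}' | is_container_healthy ragflow-es-01 && echo "Elasticsearch container is healthy"`.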

---

### `Exception: Can't connect to ES cluster`

1. Check the status of the Elasticsearch Docker container:

```bash
$ docker ps
```

*The status of a healthy Elasticsearch component should look as follows:*

```
91220e3285dd docker.elastic.co/elasticsearch/elasticsearch:8.11.3 "/bin/tini -- /usr/l…" 11 hours ago Up 11 hours (healthy) 9300/tcp, 0.0.0.0:9200->9200/tcp, :::9200->9200/tcp ragflow-es-01
```

2. Follow [this document](./guides/run_health_check.md) to check the health status of the Elasticsearch service.

:::danger IMPORTANT

The status of a Docker container does not necessarily reflect the status of the service. You may find that your services are unhealthy even when the corresponding Docker containers are up and running. Possible reasons for this include network failures, incorrect port numbers, or DNS issues.

:::

3. If your container keeps restarting, ensure `vm.max_map_count` >= 262144 as per [this README](https://github.com/infiniflow/ragflow?tab=readme-ov-file#-start-up-the-server). To keep this change after a reboot, also update the `vm.max_map_count` value in **/etc/sysctl.conf**. Note that this configuration applies only to Linux.
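A minimal sketch for checking the current value against the 262144 minimum. `check_max_map_count` is a hypothetical helper; pass a number to test it directly, or call it without arguments to read the live kernel setting from `/proc`.

```bash
# Hypothetical helper: check that vm.max_map_count is at least 262144 (Linux only).
check_max_map_count() {
  local required=262144
  local current="${1:-$(cat /proc/sys/vm/max_map_count)}"
  if [ "$current" -ge "$required" ]; then
    echo "ok: vm.max_map_count=$current"
  else
    echo "too low: vm.max_map_count=$current (need >= $required)" >&2
    return 1
  fi
}
```

If the value is too low, `sudo sysctl -w vm.max_map_count=262144` applies it until the next reboot, and adding the line `vm.max_map_count=262144` to **/etc/sysctl.conf** keeps it across reboots.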

---

### Can't start ES container and get `Elasticsearch did not exit normally`

This happens when `vm.max_map_count` is not set in **/etc/sysctl.conf**: any change you made to this value at runtime is reset after a system reboot.

---

### `{"data":null,"code":100,"message":"<NotFound '404: Not Found'>"}`

Your IP address or port number may be incorrect. If you are using the default configurations, enter `http://<IP_OF_YOUR_MACHINE>` (**NOT 9380, AND NO PORT NUMBER REQUIRED!**) in your browser. This should work.

---

### `Ollama - Mistral instance running at 127.0.0.1:11434 but cannot add Ollama as model in RagFlow`

A correct Ollama IP address and port are crucial to adding Ollama models to RAGFlow:

- If you are on demo.ragflow.io, ensure that the server hosting Ollama has a publicly accessible IP address. Note that 127.0.0.1 is not a publicly accessible IP address.

- If you deploy RAGFlow locally, ensure that Ollama and RAGFlow are in the same LAN and can communicate with each other.

See [Deploy a local LLM](./guides/models/deploy_local_llm.mdx) for more information.

---

### Do you offer examples of using DeepDoc to parse PDF or other files?

Yes, we do. See the Python files under the **rag/app** folder.

---

### `FileNotFoundError: [Errno 2] No such file or directory`

1. Check the status of the MinIO Docker container:

```bash
$ docker ps
```

*The status of a healthy MinIO component should look as follows:*

```
cd29bcb254bc quay.io/minio/minio:RELEASE.2023-12-20T01-00-02Z "/usr/bin/docker-ent…" 2 weeks ago Up 11 hours 0.0.0.0:9001->9001/tcp, :::9001->9001/tcp, 0.0.0.0:9000->9000/tcp, :::9000->9000/tcp ragflow-minio
```

2. Follow [this document](./guides/run_health_check.md) to check the health status of the MinIO service.

:::danger IMPORTANT

The status of a Docker container does not necessarily reflect the status of the service. You may find that your services are unhealthy even when the corresponding Docker containers are up and running. Possible reasons for this include network failures, incorrect port numbers, or DNS issues.

:::

---

## Usage

---

### How to run RAGFlow with a locally deployed LLM?

You can use Ollama or Xinference to deploy a local LLM. See [here](./guides/models/deploy_local_llm.mdx) for more information.

---

### How to add an LLM that is not supported?

If your model is not currently supported but has APIs compatible with those of OpenAI, click **OpenAI-API-Compatible** on the **Model providers** page to configure your model.

---

### How to integrate RAGFlow with Ollama?

- If RAGFlow is locally deployed, ensure that your RAGFlow and Ollama are in the same LAN.

- If you are using our online demo, ensure that the IP address of your Ollama server is public and accessible.

See [here](./guides/models/deploy_local_llm.mdx) for more information.

---

### How to change the file size limit?

For a locally deployed RAGFlow: the total file size limit per upload is 1GB, with a batch upload limit of 32 files. There is no cap on the total number of files per account. To update this 1GB file size limit:

- In **docker/.env**, uncomment `# MAX_CONTENT_LENGTH=1073741824` and adjust the value as needed. Note that `1073741824` represents 1GB in bytes.

- If you update the value of `MAX_CONTENT_LENGTH` in **docker/.env**, ensure that you update `client_max_body_size` in **nginx/nginx.conf** accordingly.
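The byte arithmetic and the matching nginx value can be handled together. A sketch under these assumptions: `set_upload_limit_mib` is hypothetical; it rewrites the `MAX_CONTENT_LENGTH` line whether or not it is still commented out, changes nothing if the line is absent, and only prints a `client_max_body_size` directive for you to copy into **nginx/nginx.conf**. In nginx, the `M` suffix means megabytes (1024 * 1024 bytes), so 1024M matches the 1GB default of 1073741824 bytes.

```bash
# Hypothetical helper: set MAX_CONTENT_LENGTH to N MiB in docker/.env and print
# the matching nginx directive (1 MiB = 1048576 bytes).
set_upload_limit_mib() {
  local env_file="$1" mib="$2"
  local bytes=$(( mib * 1024 * 1024 ))
  # Matches the line whether or not it is commented out.
  sed "s/^#*[[:space:]]*MAX_CONTENT_LENGTH=.*/MAX_CONTENT_LENGTH=${bytes}/" "$env_file" > "$env_file.tmp" &&
    cat "$env_file.tmp" > "$env_file" &&
    rm -f "$env_file.tmp"
  echo "client_max_body_size ${mib}M;"
}
```

For example, `set_upload_limit_mib docker/.env 2048` sets `MAX_CONTENT_LENGTH=2147483648` and prints `client_max_body_size 2048M;`.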

:::tip NOTE

It is not recommended to manually change the 32-file batch upload limit. However, if you use RAGFlow's HTTP API or Python SDK to upload files, the 32-file batch upload limit is automatically removed.

:::

---

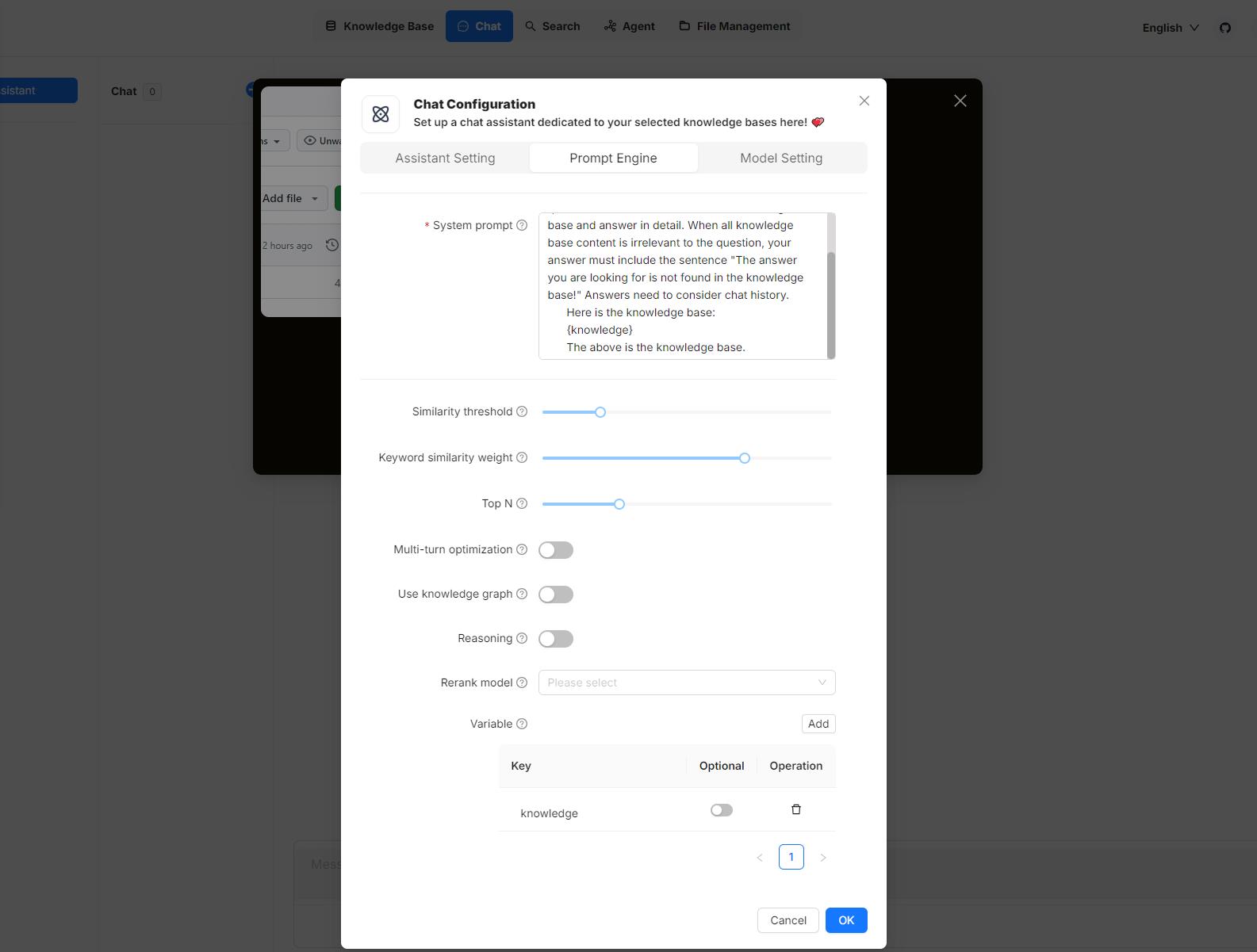

### `Error: Range of input length should be [1, 30000]`

This error occurs because there are too many chunks matching your search criteria. Try reducing the **TopN** and increasing **Similarity threshold** to fix this issue:

1. Click **Chat** at the top center of the page.

2. Right-click the desired conversation > **Edit** > **Prompt engine**.

3. Reduce the **TopN** and/or raise the **Similarity threshold**.

4. Click **OK** to confirm your changes.

---

### How to get an API key for integration with third-party applications?

See [Acquire a RAGFlow API key](./develop/acquire_ragflow_api_key.md).

---

### How to upgrade RAGFlow?

See [Upgrade RAGFlow](./guides/upgrade_ragflow.mdx) for more information.

---

### How to switch the document engine to Infinity?

To switch your document engine from Elasticsearch to [Infinity](https://github.com/infiniflow/infinity):

1. Stop all running containers:

```bash

$ docker compose -f docker/docker-compose.yml down -v

```

:::caution WARNING

`-v` will delete all Docker container volumes, and the existing data will be cleared.

:::

2. In **docker/.env**, set `DOC_ENGINE=${DOC_ENGINE:-infinity}`.

3. Restart your Docker containers:

```bash
$ docker compose -f docker/docker-compose.yml up -d
```
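Step 2 can be scripted as well. A minimal sketch, assuming the line currently reads `DOC_ENGINE=${DOC_ENGINE:-elasticsearch}`; `set_doc_engine` is a hypothetical helper that keeps the `${DOC_ENGINE:-...}` form and changes only the default engine name.

```bash
# Hypothetical helper: rewrite the DOC_ENGINE line in docker/.env,
# keeping the ${DOC_ENGINE:-...} form and changing only the default.
set_doc_engine() {
  local env_file="$1" engine="$2"
  sed "s/^DOC_ENGINE=.*/DOC_ENGINE=\${DOC_ENGINE:-${engine}}/" "$env_file" > "$env_file.tmp" &&
    cat "$env_file.tmp" > "$env_file" &&
    rm -f "$env_file.tmp"
}
```

For example, run `set_doc_engine docker/.env infinity` after step 1 and before restarting the containers.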

---

### Where are my uploaded files stored in RAGFlow's image?

All uploaded files are stored in MinIO, RAGFlow's object storage solution. For instance, if you upload a file directly to a knowledge base, it is stored at `<knowledgebase_id>/filename`.

---

release_notes.md

---

sidebar_position: 2

slug: /release_notes

---

# Releases

Key features, improvements and bug fixes in the latest releases.

:::info

Each RAGFlow release is available in two editions:

- **Slim edition**: excludes built-in embedding models and is identified by a **-slim** suffix added to the version name. Example: `infiniflow/ragflow:v0.18.0-slim`

- **Full edition**: includes built-in embedding models and has no suffix added to the version name. Example: `infiniflow/ragflow:v0.18.0`

:::

## v0.18.0

Released on April 23, 2025.

### Compatibility changes

From this release onwards, built-in rerank models have been removed because they have minimal impact on retrieval rates but significantly increase retrieval time.

### New features

- MCP server: enables access to RAGFlow's knowledge bases via MCP.

- DeepDoc supports using a VLM (vision-language model) as a processing pipeline during document layout recognition, enabling in-depth analysis of images in PDF and DOCX files.

- OpenAI-compatible APIs: Agents can be called via OpenAI-compatible APIs.

- User registration control: administrators can enable or disable user registration through an environment variable.

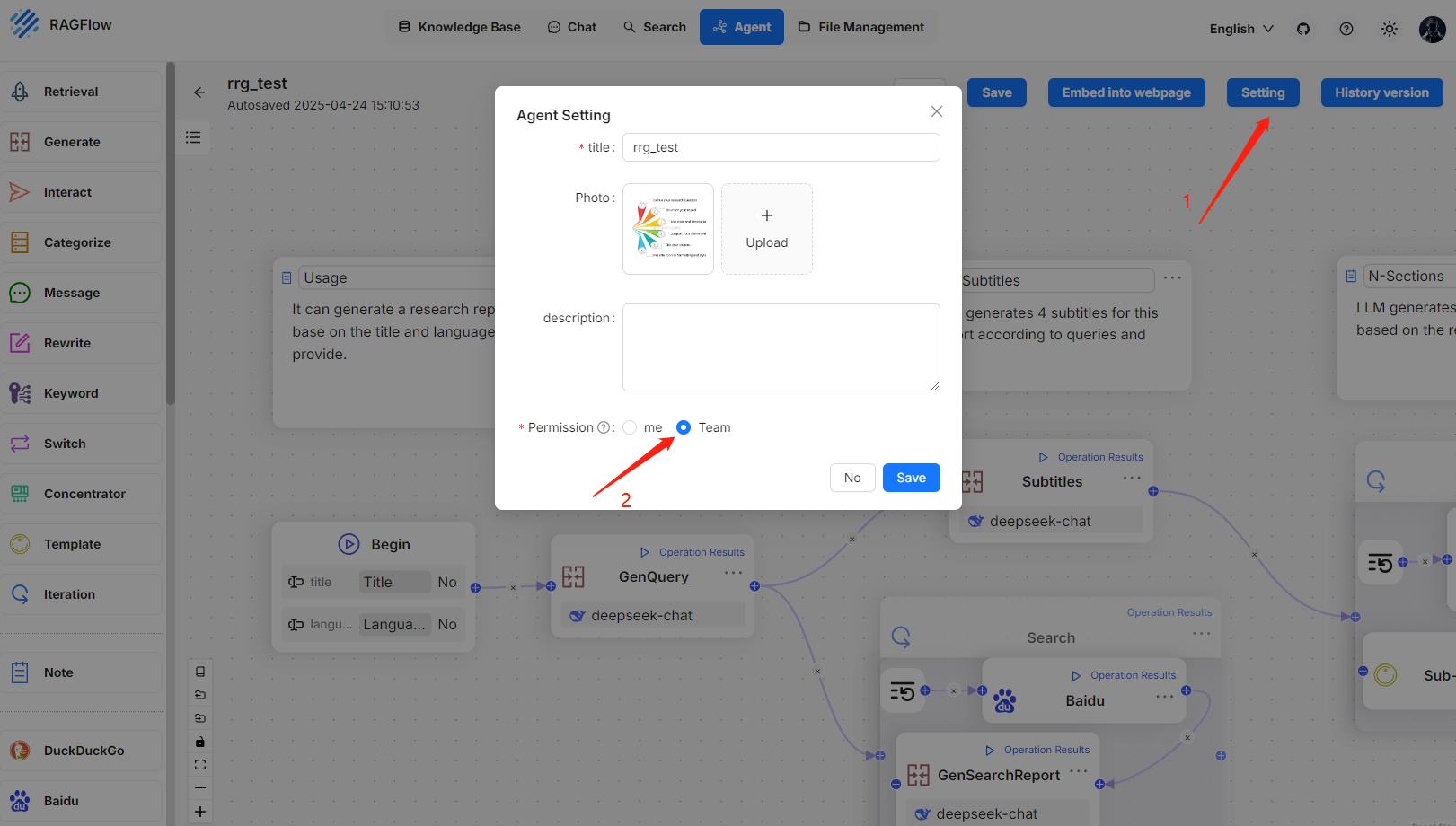

- Team collaboration: Agents can be shared with team members.

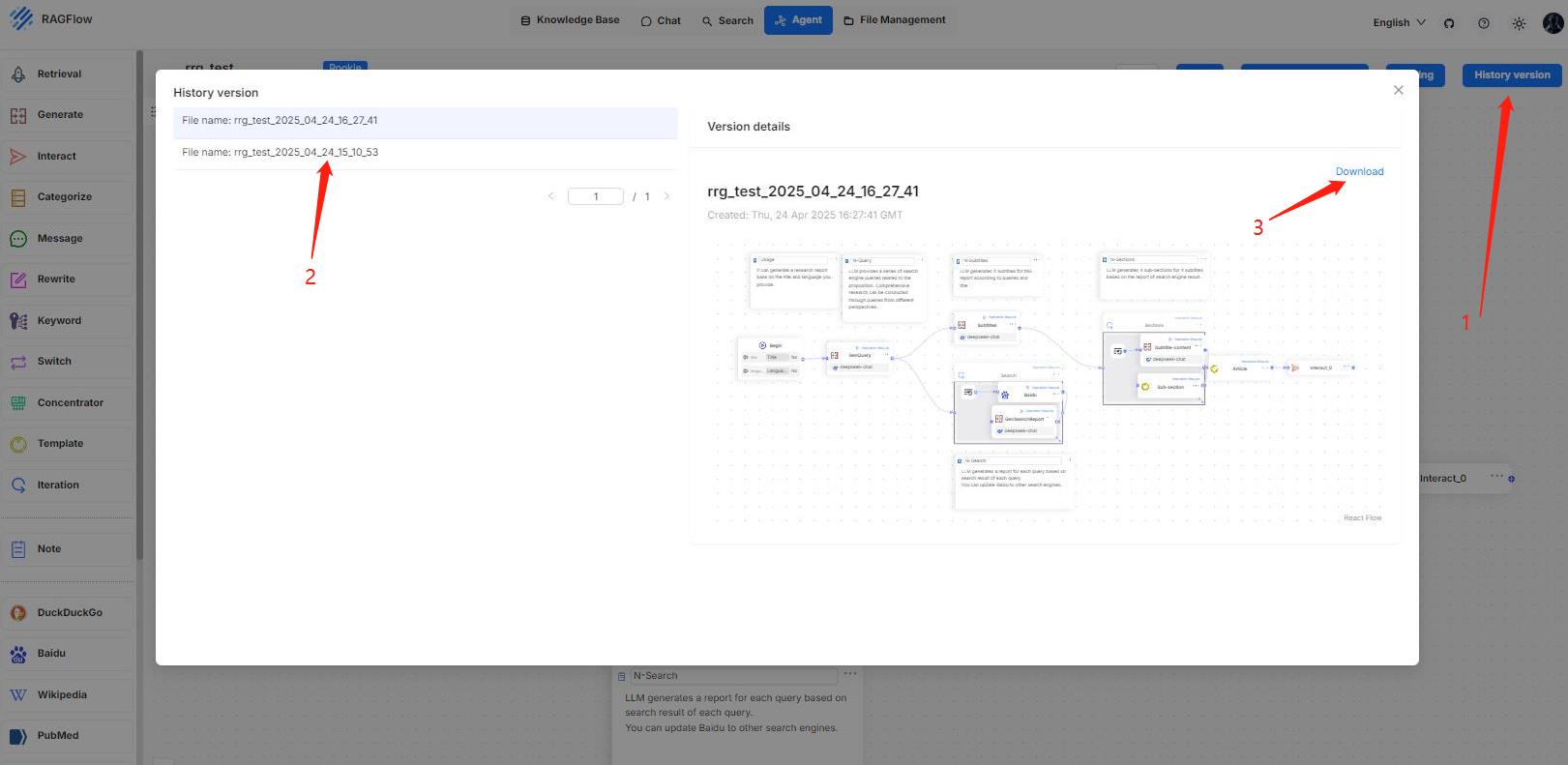

- Agent version control: all updates are continuously logged and can be rolled back to a previous version via export.

### Improvements

- Enhanced answer referencing: Citation accuracy in generated responses is improved.

- Enhanced question-answering experience: users can now manually stop streaming output during a conversation.

### Documentation

#### Added documents

- [Set page rank](./guides/dataset/set_page_rank.md)

- [Enable RAPTOR](./guides/dataset/enable_raptor.md)

- [Set variables for your chat assistant](./guides/chat/set_chat_variables.md)

- [Launch RAGFlow MCP server](./develop/mcp/launch_mcp_server.md)

## v0.17.2

Released on March 13, 2025.

### Compatibility changes

- Removes the **Max_tokens** setting from **Chat configuration**.

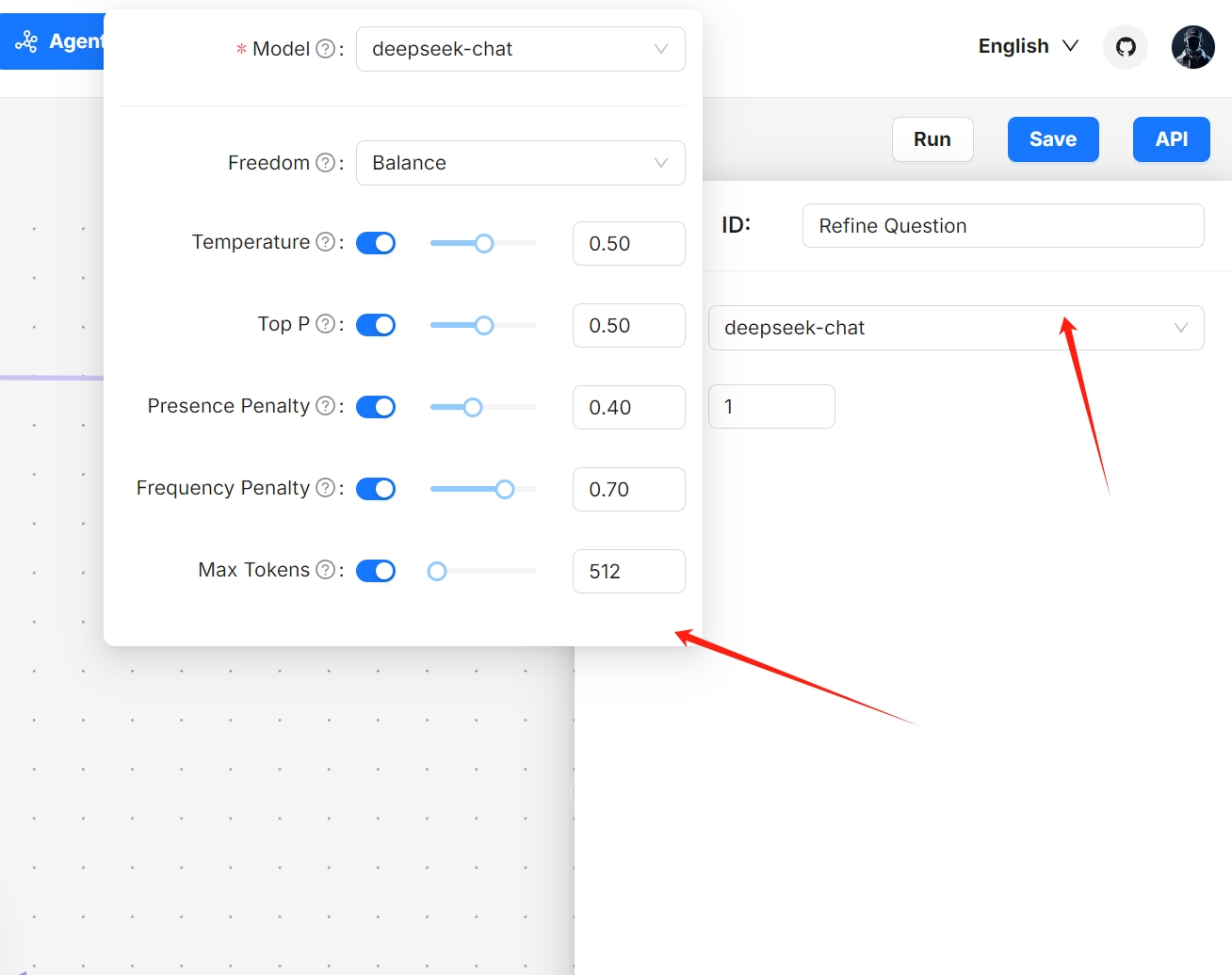

- Removes the **Max_tokens** setting from **Generate**, **Rewrite**, **Categorize**, **Keyword** agent components.

From this release onwards, if you still see RAGFlow's responses being cut short or truncated, check the **Max_tokens** setting of your model provider.

### Improvements

- Adds OpenAI-compatible APIs.

- Introduces a German user interface.

- Accelerates knowledge graph extraction.

- Enables Tavily-based web search in the **Retrieval** agent component.

- Adds Tongyi-Qianwen QwQ models (OpenAI-compatible).

- Supports CSV files in the **General** chunking method.

### Fixed issues

- Unable to add models via Ollama/Xinference, an issue introduced in v0.17.1.

### Related APIs

#### HTTP APIs

- [Create chat completion](./references/http_api_reference.md#openai-compatible-api)

#### Python APIs

- [Create chat completion](./references/python_api_reference.md#openai-compatible-api)

## v0.17.1

Released on March 11, 2025.

### Improvements

- Improves English tokenization quality.

- Improves the table extraction logic in Markdown document parsing.

- Updates SiliconFlow's model list.

- Supports parsing XLS files (Excel 97-2003) with improved corresponding error handling.

- Supports Huggingface rerank models.

- Enables relative time expressions ("now", "yesterday", "last week", "next year", and more) in chat assistant and the **Rewrite** agent component.

### Fixed issues

- A repetitive knowledge graph extraction issue.

- Issues with API calling.

- Missing options in the **PDF parser** (aka **Document parser**) dropdown.

- A Tavily web search issue.

- Unable to preview diagrams or images in an AI chat.

### Documentation

#### Added documents

- [Use tag set](./guides/dataset/use_tag_sets.md)

## v0.17.0

Released on March 3, 2025.

### New features

- AI chat: Implements Deep Research for agentic reasoning. To activate this, enable the **Reasoning** toggle under the **Prompt engine** tab of your chat assistant dialogue.

- AI chat: Leverages Tavily-based web search to enhance contexts in agentic reasoning. To activate this, enter the correct Tavily API key under the **Assistant settings** tab of your chat assistant dialogue.

- AI chat: Supports starting a chat without specifying knowledge bases.

- AI chat: HTML files can also be previewed and referenced, in addition to PDF files.

- Dataset: Adds a **PDF parser**, aka **Document parser**, dropdown menu to dataset configurations. Options include DeepDoc, which is time-consuming; a much faster **naive** option (plain text), which skips DLA (Document Layout Analysis), OCR (Optical Character Recognition), and TSR (Table Structure Recognition) tasks; and several currently *experimental* large model options.

- Agent component: **(x)** or a forward slash `/` can be used to insert available keys (variables) in the system prompt field of the **Generate** or **Template** component.

- Object storage: Supports using Aliyun OSS (Object Storage Service) as a file storage option.

- Models: Updates the supported model list for Tongyi-Qianwen (Qwen), adding DeepSeek-specific models; adds ModelScope as a model provider.

- APIs: Document metadata can be updated through an API.

The following diagram illustrates the workflow of RAGFlow's Deep Research:

The following is a screenshot of a conversation that integrates Deep Research:

### Related APIs

#### HTTP APIs

Adds a body parameter `"meta_fields"` to the [Update document](./references/http_api_reference.md#update-document) method.

#### Python APIs

Adds a key option `"meta_fields"` to the [Update document](./references/python_api_reference.md#update-document) method.

### Documentation

#### Added documents

- [Run retrieval test](./guides/dataset/run_retrieval_test.md)

## v0.16.0

Released on February 6, 2025.

### New features

- Supports DeepSeek R1 and DeepSeek V3.

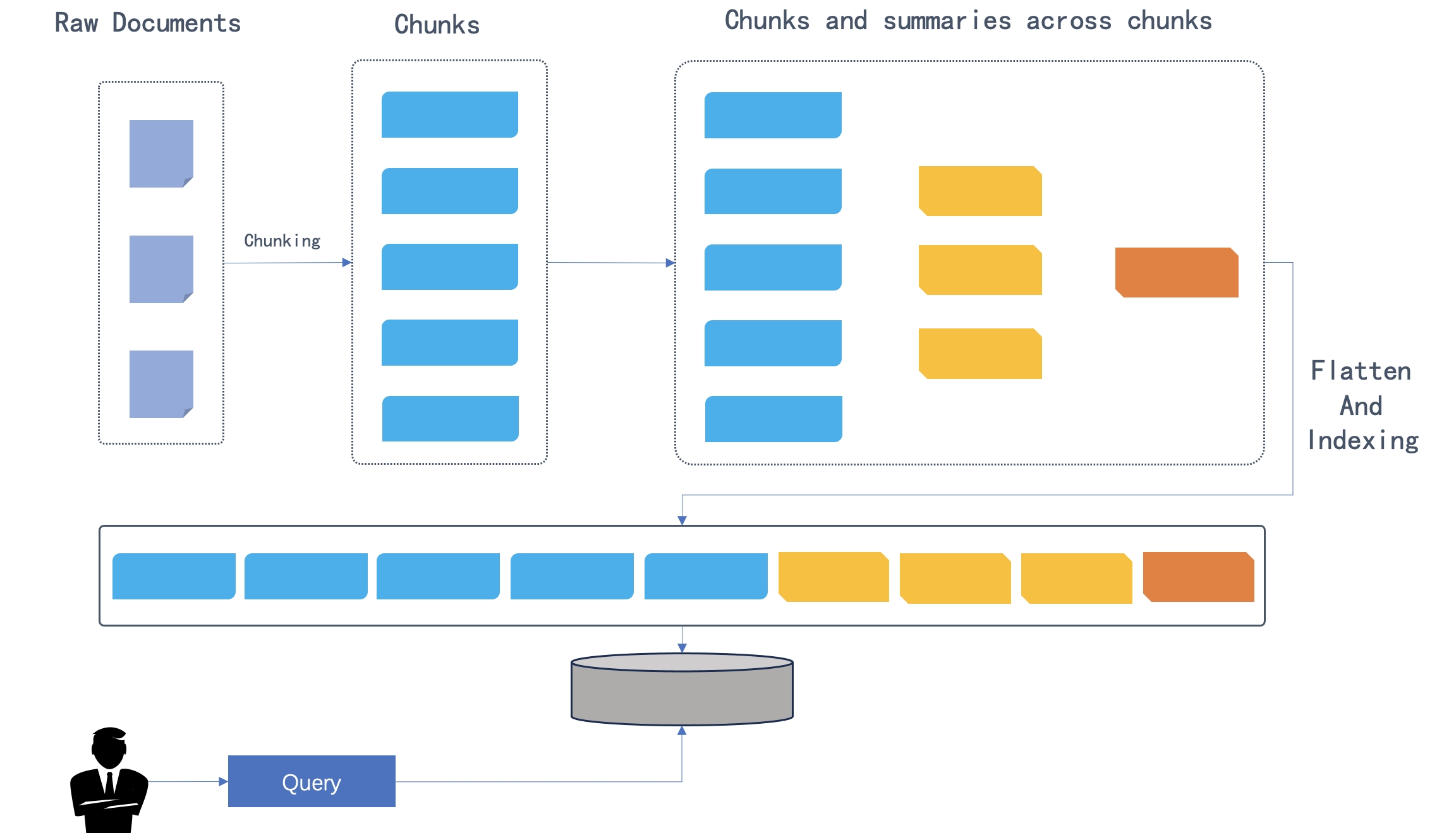

- GraphRAG refactor: Knowledge graph is dynamically built on an entire knowledge base (dataset) rather than on an individual file, and automatically updated when a newly uploaded file starts parsing. See [here](https://ragflow.io/docs/dev/construct_knowledge_graph).

- Adds an **Iteration** agent component and a **Research report generator** agent template. See [here](./guides/agent/agent_component_reference/iteration.mdx).

- New UI language: Portuguese.

- Allows setting metadata for a specific file in a knowledge base to enhance AI-powered chats. See [here](./guides/dataset/set_metadata.md).

- Upgrades RAGFlow's document engine [Infinity](https://github.com/infiniflow/infinity) to v0.6.0.dev3.

- Supports GPU acceleration for DeepDoc (see [docker-compose-gpu.yml](https://github.com/infiniflow/ragflow/blob/main/docker/docker-compose-gpu.yml)).

- Supports creating and referencing a **Tag** knowledge base as a key milestone towards bridging the semantic gap between query and response.

:::danger IMPORTANT

The **Tag knowledge base** feature is *unavailable* on the [Infinity](https://github.com/infiniflow/infinity) document engine.

:::

### Documentation

#### Added documents

- [Construct knowledge graph](./guides/dataset/construct_knowledge_graph.md)

- [Set metadata](./guides/dataset/set_metadata.md)

- [Begin component](./guides/agent/agent_component_reference/begin.mdx)

- [Generate component](./guides/agent/agent_component_reference/generate.mdx)

- [Interact component](./guides/agent/agent_component_reference/interact.mdx)

- [Retrieval component](./guides/agent/agent_component_reference/retrieval.mdx)

- [Categorize component](./guides/agent/agent_component_reference/categorize.mdx)

- [Keyword component](./guides/agent/agent_component_reference/keyword.mdx)

- [Message component](./guides/agent/agent_component_reference/message.mdx)

- [Rewrite component](./guides/agent/agent_component_reference/rewrite.mdx)

- [Switch component](./guides/agent/agent_component_reference/switch.mdx)

- [Concentrator component](./guides/agent/agent_component_reference/concentrator.mdx)

- [Template component](./guides/agent/agent_component_reference/template.mdx)

- [Iteration component](./guides/agent/agent_component_reference/iteration.mdx)

- [Note component](./guides/agent/agent_component_reference/note.mdx)

## v0.15.1

Released on December 25, 2024.

### Upgrades

- Upgrades RAGFlow's document engine [Infinity](https://github.com/infiniflow/infinity) to v0.5.2.

- Enhances the log display of document parsing status.

### Fixed issues

This release fixes the following issues:

- The `SCORE not found` and `position_int` errors returned by [Infinity](https://github.com/infiniflow/infinity).

- Once an embedding model in a specific knowledge base is changed, embedding models in other knowledge bases can no longer be changed.

- Slow response in question-answering and AI search due to repetitive loading of the embedding model.

- Failure to parse documents with RAPTOR.

- Information loss when using the **Table** parsing method.

- Miscellaneous API issues.

### Related APIs

#### HTTP APIs

Adds an optional parameter `"user_id"` to the following APIs:

- [Create session with chat assistant](https://ragflow.io/docs/dev/http_api_reference#create-session-with-chat-assistant)

- [Update chat assistant's session](https://ragflow.io/docs/dev/http_api_reference#update-chat-assistants-session)

- [List chat assistant's sessions](https://ragflow.io/docs/dev/http_api_reference#list-chat-assistants-sessions)

- [Create session with agent](https://ragflow.io/docs/dev/http_api_reference#create-session-with-agent)

- [Converse with chat assistant](https://ragflow.io/docs/dev/http_api_reference#converse-with-chat-assistant)

- [Converse with agent](https://ragflow.io/docs/dev/http_api_reference#converse-with-agent)

- [List agent sessions](https://ragflow.io/docs/dev/http_api_reference#list-agent-sessions)
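As a sketch of how the optional parameter fits into a request, the helper below builds the JSON body for creating a chat-assistant session and adds `"user_id"` only when you supply one. It assumes the rest of the body is just the session `"name"` and that the body is sent to an endpoint such as `POST /api/v1/chats/{chat_id}/sessions`; both are assumptions to confirm in the HTTP API reference.

```python
import json


def create_session_body(name, user_id=None):
    """Return the JSON body for a "create session" request.

    "user_id" is optional, so it is only included when a value is supplied.
    """
    body = {"name": name}
    if user_id is not None:
        body["user_id"] = user_id
    return json.dumps(body)


print(create_session_body("support-session", user_id="customer-42"))
# {"name": "support-session", "user_id": "customer-42"}
print(create_session_body("anonymous-session"))
# {"name": "anonymous-session"}
```

Omitting the key entirely, rather than sending `null`, keeps requests from older clients unchanged.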

## v0.15.0

Released on December 18, 2024.

### New features

- Introduces additional Agent-specific APIs.

- Supports using page rank score to improve retrieval performance when searching across multiple knowledge bases.

- Offers an iframe in Chat and Agent to facilitate the integration of RAGFlow into your webpage.

- Adds a Helm chart for deploying RAGFlow on Kubernetes.

- Supports importing or exporting an agent in JSON format.

- Supports step run for Agent components/tools.

- Adds a new UI language: Japanese.

- Supports resuming GraphRAG and RAPTOR from a failure, enhancing task management resilience.

- Adds more Mistral models.

- Adds a dark mode to the UI, allowing users to toggle between light and dark themes.

### Improvements

- Upgrades the Document Layout Analysis model in DeepDoc.

- Significantly enhances the retrieval performance when using [Infinity](https://github.com/infiniflow/infinity) as the document engine.

### Related APIs

#### HTTP APIs

- [List agent sessions](https://ragflow.io/docs/dev/http_api_reference#list-agent-sessions)

- [List agents](https://ragflow.io/docs/dev/http_api_reference#list-agents)

#### Python APIs

- [List agent sessions](https://ragflow.io/docs/dev/python_api_reference#list-agent-sessions)

- [List agents](https://ragflow.io/docs/dev/python_api_reference#list-agents)

## v0.14.1

Released on November 29, 2024.

### Improvements

Adds [Infinity's configuration file](https://github.com/infiniflow/ragflow/blob/main/docker/infinity_conf.toml) to facilitate integration and customization of [Infinity](https://github.com/infiniflow/infinity) as a document engine. From this release onwards, updates to Infinity's configuration can be made directly within RAGFlow and will take effect immediately after restarting RAGFlow using `docker compose`. [#3715](https://github.com/infiniflow/ragflow/pull/3715)

### Fixed issues

This release fixes the following issues:

- Unable to display or edit content of a chunk after clicking it.

- A `'Not found'` error in Elasticsearch.

- Chinese text becoming garbled during parsing.

- A compatibility issue with Polars.

- A compatibility issue between Infinity and GraphRAG.

## v0.14.0

Released on November 26, 2024.

### New features

- Supports [Infinity](https://github.com/infiniflow/infinity) or Elasticsearch (default) as the document engine for vector storage and full-text indexing. [#2894](https://github.com/infiniflow/ragflow/pull/2894)

- Enhances user experience by adding more variables to the Agent and implementing auto-saving.

- Adds a three-step translation agent template, inspired by [Andrew Ng's translation agent](https://github.com/andrewyng/translation-agent).

- Adds an SEO-optimized blog writing agent template.

- Provides HTTP and Python APIs for conversing with an agent.

- Supports the use of English synonyms during retrieval processes.

- Optimizes term weight calculations, reducing the retrieval time by 50%.

- Improves task executor monitoring with additional performance indicators.

- Replaces Redis with Valkey.

- Adds three new UI languages (*contributed by the community*): Indonesian, Spanish, and Vietnamese.

### Compatibility changes

From this release onwards, **service_conf.yaml.template** replaces **service_conf.yaml** for configuring back-end services. Upon Docker container startup, the environment variables referenced in this template file are automatically replaced with their values, and **service_conf.yaml** is generated from the result. [#3341](https://github.com/infiniflow/ragflow/pull/3341)

This approach eliminates the need to manually update **service_conf.yaml** after making changes to **.env**, facilitating dynamic environment configurations.

:::danger IMPORTANT

Ensure that you [upgrade **both** your code **and** Docker image to this release](https://ragflow.io/docs/dev/upgrade_ragflow#upgrade-ragflow-to-the-most-recent-officially-published-release) before trying this new approach.

:::
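The template substitution described above can be pictured with a minimal Python sketch. It assumes the template references variables with shell-style `${NAME:-default}` placeholders, which fall back to `default` when `NAME` is unset or empty; RAGFlow's actual startup script may perform the substitution differently, and the template fragment below is illustrative rather than copied from the real file.

```python
import os
import re

# Matches ${NAME} and ${NAME:-default}
PLACEHOLDER = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)(?::-([^}]*))?\}")


def render_template(text, env=None):
    """Substitute ${NAME} / ${NAME:-default} placeholders using env (default: os.environ)."""
    env = os.environ if env is None else env

    def substitute(match):
        name, default = match.group(1), match.group(2)
        value = env.get(name, "")
        if value == "" and default is not None:
            return default  # shell ":-" semantics: unset or empty -> default
        return value

    return PLACEHOLDER.sub(substitute, text)


# Illustrative template fragment, not copied from the real file
template = "mysql:\n  host: '${MYSQL_HOST:-mysql}'\n  password: '${MYSQL_PASSWORD:-infini_rag_flow}'\n"
print(render_template(template, {"MYSQL_PASSWORD": "my_secret"}))
```

Because defaults live in the template, a value only needs to appear in **.env** when it differs from the default.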

### Related APIs

#### HTTP APIs

- [Create session with agent](https://ragflow.io/docs/dev/http_api_reference#create-session-with-agent)

- [Converse with agent](https://ragflow.io/docs/dev/http_api_reference#converse-with-agent)

#### Python APIs

- [Create session with agent](https://ragflow.io/docs/dev/python_api_reference#create-session-with-agent)

- [Converse with agent](https://ragflow.io/docs/dev/python_api_reference#converse-with-agent)

### Documentation

#### Added documents

- [Configurations](https://ragflow.io/docs/dev/configurations)

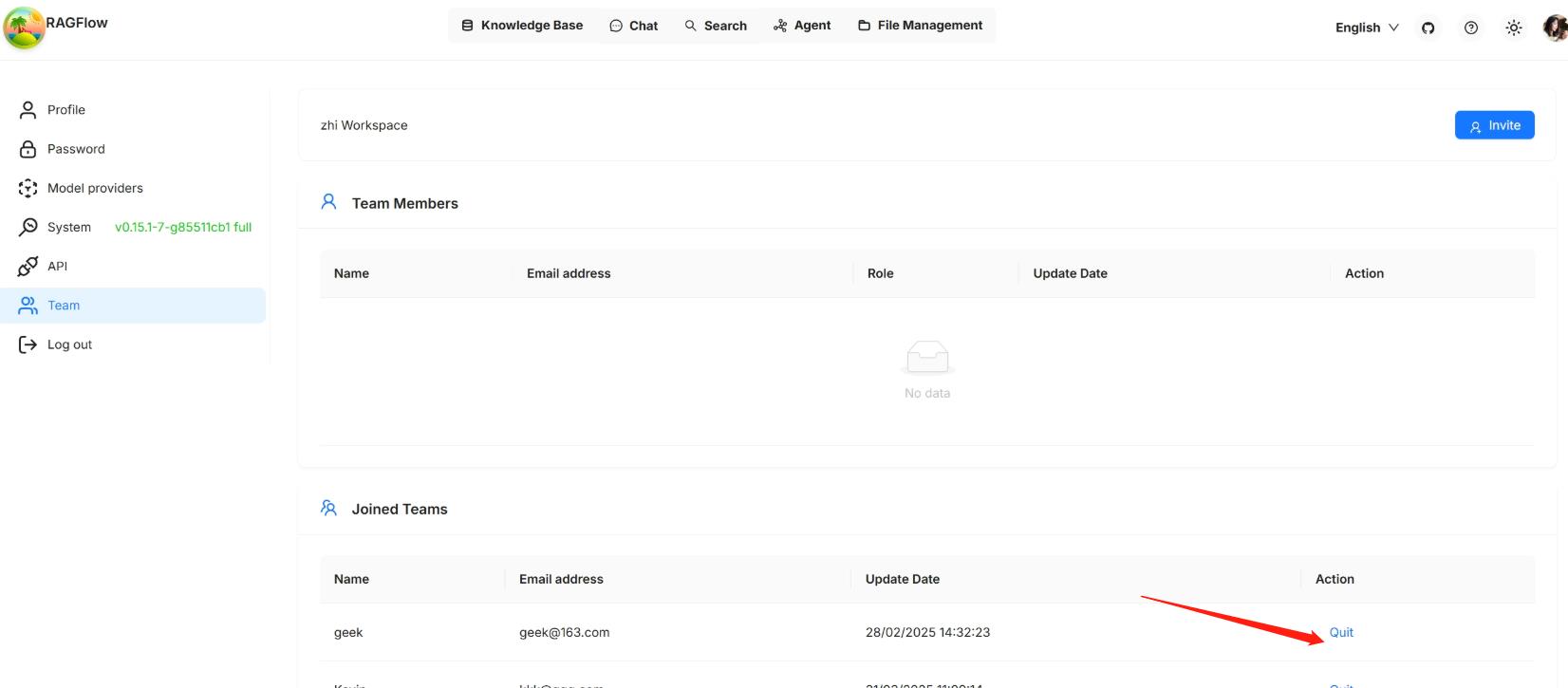

- [Manage team members](./guides/team/manage_team_members.md)

- [Run health check on RAGFlow's dependencies](https://ragflow.io/docs/dev/run_health_check)

## v0.13.0

Released on October 31, 2024.

### New features

- Adds the team management functionality for all users.

- Updates the Agent UI to improve usability.

- Adds support for Markdown chunking in the **General** chunking method.

- Introduces an **invoke** tool within the Agent UI.

- Integrates support for Dify's knowledge base API.

- Adds support for GLM4-9B and Yi-Lightning models.

- Introduces HTTP and Python APIs for dataset management, file management within dataset, and chat assistant management.

:::tip NOTE

To download RAGFlow's Python SDK:

```bash

pip install ragflow-sdk==0.13.0

```

:::

### Documentation

#### Added documents

- [Acquire a RAGFlow API key](./develop/acquire_ragflow_api_key.md)

- [HTTP API Reference](./references/http_api_reference.md)

- [Python API Reference](./references/python_api_reference.md)

## v0.12.0

Released on September 30, 2024.

### New features

- Offers slim editions of RAGFlow's Docker images, which do not include built-in BGE/BCE embedding or reranking models.

- Improves the results of multi-round dialogues.

- Enables users to remove added LLM vendors.

- Adds support for **OpenTTS** and **SparkTTS** models.

- Implements an **Excel to HTML** toggle in the **General** chunking method, allowing users to parse a spreadsheet into either HTML tables or key-value pairs by row.

- Adds agent tools **YahooFinance** and **Jin10**.

- Adds an investment advisor agent template.

### Compatibility changes

From this release onwards, RAGFlow offers slim editions of its Docker images to improve the experience for users with limited Internet access. A slim edition of RAGFlow's Docker image does not include built-in BGE/BCE embedding models and has a size of about 1GB; a full edition of RAGFlow is approximately 9GB and includes both built-in embedding models and embedding models that will be downloaded once you select them in the RAGFlow UI.

The default Docker image edition is `nightly-slim`. The following list clarifies the differences between various editions:

- `nightly-slim`: The slim edition of the most recent tested Docker image.

- `v0.12.0-slim`: The slim edition of the most recent **officially released** Docker image.

- `nightly`: The full edition of the most recent tested Docker image.

- `v0.12.0`: The full edition of the most recent **officially released** Docker image.
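To make the naming scheme concrete, here is a small hypothetical helper that classifies a `RAGFLOW_IMAGE` value by the tag convention listed above: a `-slim` suffix marks a slim edition, and `nightly` marks the most recent tested build. Treating every other tag as an official release is an assumption of this sketch.

```python
def describe_image(image):
    """Classify a RAGFlow image reference such as 'infiniflow/ragflow:v0.12.0-slim'."""
    _, sep, tag = image.rpartition(":")
    if not sep or not tag or "/" in tag:
        raise ValueError(f"image reference has no tag: {image!r}")
    slim = tag.endswith("-slim")
    version = tag[: -len("-slim")] if slim else tag
    return {
        "edition": "slim" if slim else "full",
        "channel": "nightly" if version == "nightly" else "release",
        "version": version,
    }


print(describe_image("infiniflow/ragflow:nightly-slim"))
# {'edition': 'slim', 'channel': 'nightly', 'version': 'nightly'}
print(describe_image("infiniflow/ragflow:v0.12.0"))
# {'edition': 'full', 'channel': 'release', 'version': 'v0.12.0'}
```

The `"/" in tag` check rejects references such as `localhost:5000/infiniflow/ragflow`, where the only colon belongs to a registry port rather than a tag.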

See [Upgrade RAGFlow](https://ragflow.io/docs/dev/upgrade_ragflow) for instructions on upgrading.

### Documentation

#### Added documents

- [Upgrade RAGFlow](https://ragflow.io/docs/dev/upgrade_ragflow)

## v0.11.0

Released on September 14, 2024.

### New features

- Introduces an AI search interface within the RAGFlow UI.

- Supports audio output via **FishAudio** or **Tongyi Qwen TTS**.

- Allows the use of Postgres for metadata storage, in addition to MySQL.

- Supports object storage options with S3 or Azure Blob.

- Supports model vendors: **Anthropic**, **Voyage AI**, and **Google Cloud**.

- Supports the use of **Tencent Cloud ASR** for audio content recognition.

- Adds finance-specific agent components: **WenCai**, **AkShare**, **YahooFinance**, and **TuShare**.

- Adds a medical consultant agent template.

- Supports running retrieval benchmarking on the following datasets:

  - [ms_marco_v1.1](https://huggingface.co/datasets/microsoft/ms_marco)

  - [trivia_qa](https://huggingface.co/datasets/mandarjoshi/trivia_qa)

  - [miracl](https://huggingface.co/datasets/miracl/miracl)

## v0.10.0

Released on August 26, 2024.

### New features

- Introduces a text-to-SQL template in the Agent UI.

- Implements Agent APIs.

- Incorporates monitoring for the task executor.

- Introduces Agent tools **GitHub**, **DeepL**, **BaiduFanyi**, **QWeather**, and **GoogleScholar**.

- Supports chunking of EML files.

- Supports more LLMs or model services: **GPT-4o-mini**, **PerfXCloud**, **TogetherAI**, **Upstage**, **Novita AI**, **01.AI**, **SiliconFlow**, **PPIO**, **XunFei Spark**, **Baidu Yiyan**, and **Tencent Hunyuan**.

## v0.9.0

Released on August 6, 2024.

### New features

- Supports GraphRAG as a chunking method.

- Introduces Agent component **Keyword** and search tools, including **Baidu**, **DuckDuckGo**, **PubMed**, **Wikipedia**, **Bing**, and **Google**.

- Supports speech-to-text recognition for audio files.

- Supports model vendors **Gemini** and **Groq**.

- Supports inference frameworks, engines, and services including **LM Studio**, **OpenRouter**, **LocalAI**, and **Nvidia API**.

- Supports using reranker models in Xinference.

## v0.8.0

Released on July 8, 2024.

### New features

- Supports Agentic RAG, enabling graph-based workflow construction for RAG and agents.

- Supports model vendors **Mistral**, **MiniMax**, **Bedrock**, and **Azure OpenAI**.

- Supports DOCX files in the MANUAL chunking method.

- Supports DOCX, MD, and PDF files in the Q&A chunking method.

## v0.7.0

Released on May 31, 2024.

### New features

- Supports the use of reranker models.

- Integrates reranker and embedding models: [BCE](https://github.com/netease-youdao/BCEmbedding), [BGE](https://github.com/FlagOpen/FlagEmbedding), and [Jina](https://jina.ai/embeddings/).

- Supports LLMs Baichuan and VolcanoArk.

- Implements [RAPTOR](https://arxiv.org/html/2401.18059v1) for improved text retrieval.

- Supports HTML files in the GENERAL chunking method.

- Provides HTTP and Python APIs for deleting documents by ID.

- Supports ARM64 platforms.

:::danger IMPORTANT

While we also test RAGFlow on ARM64 platforms, we do not maintain RAGFlow Docker images for ARM.

If you are on an ARM platform, follow [this guide](./develop/build_docker_image.mdx) to build a RAGFlow Docker image.

:::

### Related APIs

#### HTTP API

- [Delete documents](https://ragflow.io/docs/dev/http_api_reference#delete-documents)

#### Python API

- [Delete documents](https://ragflow.io/docs/dev/python_api_reference#delete-documents)

## v0.6.0

Released on May 21, 2024.

### New features

- Supports streaming output.

- Provides HTTP and Python APIs for retrieving document chunks.

- Supports monitoring of system components, including Elasticsearch, MySQL, Redis, and MinIO.

- Supports disabling **Layout Recognition** in the GENERAL chunking method to reduce file chunking time.

### Related APIs

#### HTTP API

- [Retrieve chunks](https://ragflow.io/docs/dev/http_api_reference#retrieve-chunks)

#### Python API

- [Retrieve chunks](https://ragflow.io/docs/dev/python_api_reference#retrieve-chunks)
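A minimal sketch of assembling the body of a chunk-retrieval request in Python. The field names (`question`, `dataset_ids`, `document_ids`), the rule that at least one ID list is required, and the endpoint you would POST it to (assumed to be `/api/v1/retrieval`) are assumptions to verify against the HTTP API reference; `page_size` is shown only as an example of an extra option passed through unchanged.

```python
def build_retrieval_body(question, dataset_ids=None, document_ids=None, **options):
    """Assemble a JSON-serializable body for a chunk-retrieval request.

    Assumption: the request needs a question plus dataset IDs and/or document IDs.
    Extra keyword arguments (e.g. paging or similarity settings) pass through unchanged.
    """
    if not question:
        raise ValueError("question must be a non-empty string")
    if not dataset_ids and not document_ids:
        raise ValueError("provide dataset_ids, document_ids, or both")
    body = {"question": question}
    if dataset_ids:
        body["dataset_ids"] = list(dataset_ids)
    if document_ids:
        body["document_ids"] = list(document_ids)
    body.update(options)
    return body


body = build_retrieval_body("What is RAPTOR?", dataset_ids=["DATASET_ID"], page_size=5)
```

Validating locally catches an empty question or missing IDs before a round trip to the server.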

## v0.5.0

Released on May 8, 2024.

### New features

- Supports LLM DeepSeek.

develop_category_.json

{

"label": "Developers",

"position": 4,

"link": {

"type": "generated-index",

"description": "Guides for hardcore developers"

}

}

develop\acquire_ragflow_api_key.md

---

sidebar_position: 3

slug: /acquire_ragflow_api_key

---

# Acquire RAGFlow API key

An API key is required for the RAGFlow server to authenticate your HTTP/Python or MCP requests. This document provides instructions on obtaining a RAGFlow API key.

1. Click your avatar in the top right corner of the RAGFlow UI to access the configuration page.

2. Click **API** to switch to the **API** page.

3. Obtain a RAGFlow API key:

:::tip NOTE

See the [RAGFlow HTTP API reference](../references/http_api_reference.md) or the [RAGFlow Python API reference](../references/python_api_reference.md) for a complete reference of RAGFlow's HTTP or Python APIs.

:::

develop\launch_ragflow_from_source.md

---

sidebar_position: 2

slug: /launch_ragflow_from_source

---

# Launch service from source

A guide explaining how to set up a RAGFlow service from its source code. By following this guide, you'll be able to debug using the source code.

## Target audience

Developers who have added new features or modified existing code and wish to debug using the source code, *provided that* their machine has the target deployment environment set up.

## Prerequisites

- CPU ≥ 4 cores

- RAM ≥ 16 GB

- Disk ≥ 50 GB

- Docker ≥ 24.0.0 & Docker Compose ≥ v2.26.1

:::tip NOTE

If you have not installed Docker on your local machine (Windows, Mac, or Linux), see the [Install Docker Engine](https://docs.docker.com/engine/install/) guide.

:::

## Launch a service from source

To launch a RAGFlow service from source code:

### Clone the RAGFlow repository

```bash

git clone https://github.com/infiniflow/ragflow.git

cd ragflow/

```

### Install Python dependencies

1. Install uv:

```bash

pipx install uv

```

2. Install Python dependencies:

- slim:

```bash

uv sync --python 3.10 # install RAGFlow dependent python modules

```

- full:

```bash

uv sync --python 3.10 --all-extras # install RAGFlow dependent python modules

```

*A virtual environment named `.venv` is created, and all Python dependencies are installed into the new environment.*

### Launch third-party services

The following command launches the 'base' services (MinIO, Elasticsearch, Redis, and MySQL) using Docker Compose:

```bash

docker compose -f docker/docker-compose-base.yml up -d

```

### Update `host` and `port` settings for third-party services

1. Add the following line to `/etc/hosts` to resolve all hosts specified in **docker/service_conf.yaml.template** to `127.0.0.1`:

```

127.0.0.1 es01 infinity mysql minio redis

```

2. In **docker/service_conf.yaml.template**, update the MySQL port to `5455` and the Elasticsearch port to `1200`, as specified in **docker/.env**.
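A small hypothetical helper can confirm that the `/etc/hosts` entry from step 1 is in place before you start the back end. It parses hosts-file text and reports which of the required names (taken from that entry) are not mapped to `127.0.0.1`; reading `/etc/hosts` directly assumes Linux or macOS.

```python
REQUIRED_HOSTS = {"es01", "infinity", "mysql", "minio", "redis"}


def missing_loopback_hosts(hosts_text, required=REQUIRED_HOSTS):
    """Return the required host names that hosts_text does not map to 127.0.0.1."""
    mapped = set()
    for line in hosts_text.splitlines():
        line = line.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        address, *names = line.split()
        if address == "127.0.0.1":
            mapped.update(names)
    return set(required) - mapped


# Usage on Linux/macOS:
# with open("/etc/hosts") as f:
#     print(sorted(missing_loopback_hosts(f.read())))
```

An empty result means every service host name resolves locally; commented-out entries are deliberately ignored.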

### Launch the RAGFlow backend service

1. Comment out the `nginx` line in **docker/entrypoint.sh**.

```

# /usr/sbin/nginx

```

2. Activate the Python virtual environment and set `PYTHONPATH` to the project root:

```bash

source .venv/bin/activate

export PYTHONPATH=$(pwd)

```

3. **Optional:** If you cannot access Hugging Face, set the `HF_ENDPOINT` environment variable to use a mirror site:

```bash

export HF_ENDPOINT=https://hf-mirror.com

```

4. Check the configuration in **conf/service_conf.yaml**, ensuring all hosts and ports are correctly set.

5. Launch the backend service by starting the task executor and the API server:

```shell

JEMALLOC_PATH=$(pkg-config --variable=libdir jemalloc)/libjemalloc.so;

LD_PRELOAD=$JEMALLOC_PATH python rag/svr/task_executor.py 1;

```

```shell

python api/ragflow_server.py;

```

### Launch the RAGFlow frontend service

1. Navigate to the `web` directory and install the frontend dependencies:

```bash

cd web

npm install

```

2. Update `proxy.target` in **.umirc.ts** to `http://127.0.0.1:9380`:

```bash

vim .umirc.ts

```

3. Start up the RAGFlow frontend service:

```bash

npm run dev

```

*The following message appears, showing the IP address and port number of your frontend service:*

### Access the RAGFlow service

In your web browser, enter `http://127.0.0.1:<PORT>/`, ensuring the port number matches that shown in the screenshot above.

### Stop the RAGFlow service when development is done

1. Stop the RAGFlow frontend service:

```bash

pkill npm

```

2. Stop the RAGFlow backend service:

```bash

pkill -f "docker/entrypoint.sh"

```

develop\mcp_category_.json

{

"label": "MCP",

"position": 4,

"link": {

"type": "generated-index",

"description": "Guides and references on accessing RAGFlow's knowledge bases via MCP."

}

}

develop\mcp\mcp_client_example.md

---

sidebar_position: 3

slug: /mcp_client

---

# RAGFlow MCP client example

We provide a *prototype* MCP client example for testing [here](https://github.com/infiniflow/ragflow/blob/main/mcp/client/client.py).

:::danger IMPORTANT

If your MCP server is running in host mode, include your acquired API key in your client's `headers` as shown below:

```python
import asyncio

from mcp.client.sse import sse_client


async def main():
    async with sse_client("http://localhost:9382/sse", headers={"api_key": "YOUR_KEY_HERE"}) as streams:
        # Rest of your code...
        ...


asyncio.run(main())
```

:::

develop\mcp\mcp_tools.md

---

sidebar_position: 2

slug: /mcp_tools

---

# RAGFlow MCP tools

The MCP server currently offers a specialized tool to assist users in searching for relevant information powered by RAGFlow DeepDoc technology:

- **retrieve**: Fetches chunks relevant to a given question from the specified `dataset_ids` and optional `document_ids`, using the RAGFlow retrieval interface. The tool description lists the `id` and `description` of every available dataset.

For more information, see our Python implementation of the [MCP server](https://github.com/infiniflow/ragflow/blob/main/mcp/server/server.py).

guides_category_.json

{

"label": "Guides",

"position": 3,

"link": {

"type": "generated-index",

"description": "Guides for RAGFlow users and developers."

}

}

guides\manage_files.md

---

sidebar_position: 6

slug: /manage_files

---

# Files

Knowledge base, hallucination-free chat, and file management are the three pillars of RAGFlow. RAGFlow's file management allows you to upload files individually or in bulk. You can then link an uploaded file to multiple target knowledge bases. This guide showcases some basic usages of the file management feature.

:::info IMPORTANT

Uploading files to RAGFlow's file management and then linking them to knowledge bases, rather than uploading them directly to each knowledge base, is *not* an unnecessary step. It matters particularly when you want to delete some parsed files or an entire knowledge base but retain the original files.

:::

## Create folder

RAGFlow's file management allows you to establish your file system with nested folder structures. To create a folder in the root directory of RAGFlow:

:::caution NOTE

Each knowledge base in RAGFlow has a corresponding folder under the **root/.knowledgebase** directory. You are not allowed to create a subfolder within it.

:::

## Upload file

RAGFlow's file management supports file uploads from your local machine, allowing both individual and bulk uploads:

## Preview file

RAGFlow's file management supports previewing files in the following formats:

- Documents (PDF, DOCX)

- Tables (XLSX)

- Pictures (JPEG, JPG, PNG, TIF, GIF)

## Link file to knowledge bases

RAGFlow's file management allows you to *link* an uploaded file to multiple knowledge bases, creating a file reference in each target knowledge base. Therefore, deleting a file in your file management will AUTOMATICALLY REMOVE all related file references across the knowledge bases.

You can link your file to one knowledge base or multiple knowledge bases at one time:
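The reference semantics can be illustrated with a toy in-memory model. This is a hypothetical sketch of the behavior described in this guide, not RAGFlow's implementation: a file is stored once, each knowledge base holds only references to it, deleting the file drops every reference, and deleting a knowledge base leaves the original file in place.

```python
from dataclasses import dataclass, field


@dataclass
class FileStore:
    """Toy model: each file is stored once; knowledge bases hold references to it."""

    files: set[str] = field(default_factory=set)
    links: dict[str, set[str]] = field(default_factory=dict)  # knowledge base -> linked files

    def upload(self, name: str) -> None:
        self.files.add(name)

    def link(self, name: str, *knowledge_bases: str) -> None:
        if name not in self.files:
            raise KeyError(f"upload {name!r} before linking it")
        for kb in knowledge_bases:
            self.links.setdefault(kb, set()).add(name)

    def delete_file(self, name: str) -> None:
        self.files.discard(name)
        for refs in self.links.values():
            refs.discard(name)  # deleting the file removes every reference to it

    def delete_knowledge_base(self, kb: str) -> None:
        self.links.pop(kb, None)  # the original file stays in file management


store = FileStore()
store.upload("report.pdf")
store.link("report.pdf", "kb_sales", "kb_support")
store.delete_knowledge_base("kb_sales")
print(store.files)  # {'report.pdf'}
store.delete_file("report.pdf")
print(store.links)  # {'kb_support': set()}
```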

## Move file to a specific folder

## Search files or folders

**File Management** only supports file name and folder name filtering in the current directory (files or folders in the child directory will not be retrieved).

## Rename file or folder

RAGFlow's file management allows you to rename a file or folder:

## Delete files or folders

RAGFlow's file management allows you to delete files or folders individually or in bulk.

To delete a file or folder:

To bulk delete files or folders:

> - You are not allowed to delete the **root/.knowledgebase** folder.

> - Deleting files that have been linked to knowledge bases will **AUTOMATICALLY REMOVE** all associated file references across the knowledge bases.

## Download uploaded file

RAGFlow's file management allows you to download an uploaded file:

> As of RAGFlow v0.18.0, bulk download is not supported, nor can you download an entire folder.

guides\run_health_check.md

---

sidebar_position: 8

slug: /run_health_check

---

# Monitoring

Double-check the health status of RAGFlow's dependencies.

---

The operation of RAGFlow depends on four services:

- **Elasticsearch** (default) or [Infinity](https://github.com/infiniflow/infinity) as the document engine

- **MySQL**

- **Redis**

- **MinIO** for object storage

If an exception or error occurs related to any of the above services, such as `Exception: Can't connect to ES cluster`, refer to this document to check their health status.

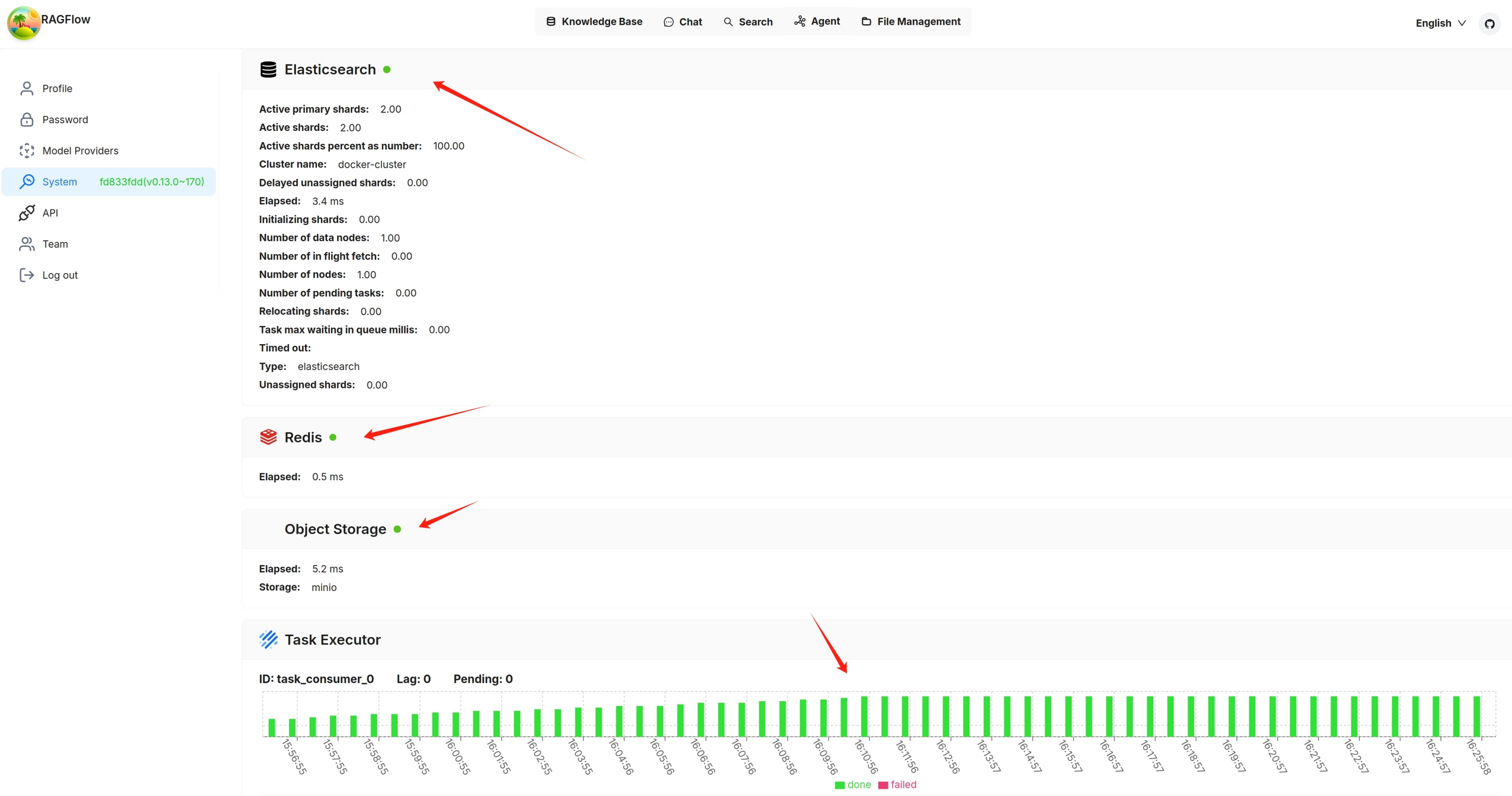

You can also click your avatar in the top right corner of the page **>** **System** to view the visualized health status of RAGFlow's core services. The following screenshot shows that all services are 'green' (running healthily). The task executor displays the *cumulative* number of completed and failed document parsing tasks from the past 30 minutes:

Services with a yellow or red light are not running properly. The following is a screenshot of the system page after running `docker stop ragflow-es-10`:

You can click on a specific 30-second time interval to view the details of completed and failed tasks:
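To cross-check the **System** page from a terminal, the sketch below tests whether each dependency accepts TCP connections on the host. It assumes Elasticsearch is the document engine and the default host port mappings from **docker/.env**: Elasticsearch `1200` and MySQL `5455` (as used in the launch-from-source guide), plus MinIO `9000` and Redis `6379`, which are assumptions here. Adjust the table if you use Infinity or changed any port. A successful connection only shows that a port is open, not that the service behind it is healthy.

```python
import socket

# Assumed host ports from docker/.env; adjust to match your deployment.
DEFAULT_SERVICES = {
    "Elasticsearch": ("127.0.0.1", 1200),
    "MySQL": ("127.0.0.1", 5455),
    "MinIO": ("127.0.0.1", 9000),
    "Redis": ("127.0.0.1", 6379),
}


def check_ports(services=DEFAULT_SERVICES, timeout=1.0):
    """Return {service: True/False} depending on whether a TCP connection succeeds."""
    status = {}
    for name, (host, port) in services.items():
        try:
            with socket.create_connection((host, port), timeout=timeout):
                status[name] = True
        except OSError:  # refused, timed out, or unresolvable
            status[name] = False
    return status


# Usage: print(check_ports())
```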

guides\upgrade_ragflow.mdx

---

sidebar_position: 11

slug: /upgrade_ragflow

---

# Upgrading

import Tabs from '@theme/Tabs';

import TabItem from '@theme/TabItem';

Upgrade RAGFlow to `nightly-slim`/`nightly` or the latest, published release.

:::info NOTE

Upgrading RAGFlow in itself will *not* remove your uploaded/historical data. However, be aware that `docker compose -f docker/docker-compose.yml down -v` will remove Docker container volumes, resulting in data loss.

:::

## Upgrade RAGFlow to `nightly-slim`/`nightly`, the most recent, tested Docker image

`nightly-slim` refers to the RAGFlow Docker image *without* embedding models, while `nightly` refers to the RAGFlow Docker image with embedding models. For details on their differences, see [ragflow/docker/.env](https://github.com/infiniflow/ragflow/blob/main/docker/.env).

To upgrade RAGFlow, you must upgrade **both** your code **and** your Docker image:

1. Clone the repo

```bash

git clone https://github.com/infiniflow/ragflow.git

```

2. Update **ragflow/docker/.env**:

<Tabs

defaultValue="nightly-slim"

values={[

{label: 'nightly-slim', value: 'nightly-slim'},

{label: 'nightly', value: 'nightly'},

]}>

<TabItem value="nightly-slim">

```bash

RAGFLOW_IMAGE=infiniflow/ragflow:nightly-slim

```

</TabItem>

<TabItem value="nightly">

```bash

RAGFLOW_IMAGE=infiniflow/ragflow:nightly

```

</TabItem>

</Tabs>

3. Update the RAGFlow image and restart RAGFlow:

```bash

docker compose -f docker/docker-compose.yml pull

docker compose -f docker/docker-compose.yml up -d

```
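If you script upgrades, the **.env** edit can be automated. The following is a minimal sketch of a hypothetical helper (not part of RAGFlow) that rewrites every active `RAGFLOW_IMAGE=` line in **docker/.env**, appends one if none exists, and leaves commented-out alternatives untouched. Run the `docker compose` pull and restart commands afterwards.

```python
import re
from pathlib import Path


def set_ragflow_image(env_path, image):
    """Point RAGFLOW_IMAGE in a docker/.env file at the given image tag.

    Active (uncommented) RAGFLOW_IMAGE lines are replaced; if none exists, one is appended.
    """
    path = Path(env_path)
    lines = path.read_text(encoding="utf-8").splitlines()
    active = re.compile(r"^\s*RAGFLOW_IMAGE\s*=")  # '# RAGFLOW_IMAGE=...' does not match
    new_line = f"RAGFLOW_IMAGE={image}"
    replaced = False
    for i, line in enumerate(lines):
        if active.match(line):
            lines[i] = new_line
            replaced = True
    if not replaced:
        lines.append(new_line)
    path.write_text("\n".join(lines) + "\n", encoding="utf-8")


# Usage: set_ragflow_image("docker/.env", "infiniflow/ragflow:nightly-slim")
```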

## Upgrade RAGFlow to the most recent, officially published release

To upgrade RAGFlow, you must upgrade **both** your code **and** your Docker image:

1. Clone the repo

```bash

git clone https://github.com/infiniflow/ragflow.git

```

2. Switch to the latest, officially published release, e.g., `v0.18.0`:

```bash

git checkout -f v0.18.0

```

3. Update **ragflow/docker/.env** as follows:

```bash

RAGFLOW_IMAGE=infiniflow/ragflow:v0.18.0

```

4. Update the RAGFlow image and restart RAGFlow:

```bash

docker compose -f docker/docker-compose.yml pull

docker compose -f docker/docker-compose.yml up -d

```

## Frequently asked questions

### Upgrade RAGFlow in an offline environment (without Internet access)

1. From an environment with Internet access, pull the required Docker image.

2. Save the Docker image to a **.tar** file.

```bash

docker save -o ragflow.v0.18.0.tar infiniflow/ragflow:v0.18.0

```

3. Copy the **.tar** file to the target server.

4. Load the **.tar** file into Docker:

```bash

docker load -i ragflow.v0.18.0.tar

```

guides\agent_category_.json

{

"label": "Agents",

"position": 3,

"link": {

"type": "generated-index",

"description": "RAGFlow v0.8.0 introduces an agent mechanism, featuring a no-code workflow editor on the front end and a comprehensive graph-based task orchestration framework on the backend."

}

}

guides\agent\agent_introduction.md

---

sidebar_position: 1

slug: /agent_introduction

---

# Introduction to agents

Key concepts, basic operations, a quick view of the agent editor.

---

## Key concepts

Agents and RAG are complementary techniques, each enhancing the other’s capabilities in business applications. RAGFlow v0.8.0 introduces an agent mechanism, featuring a no-code workflow editor on the front end and a comprehensive graph-based task orchestration framework on the back end. This mechanism is built on top of RAGFlow's existing RAG solutions and aims to orchestrate search technologies such as query intent classification, conversation leading, and query rewriting to:

- Improve retrieval quality, and

- Accommodate more complex scenarios.

## Create an agent

:::tip NOTE

Before proceeding, ensure that:

1. You have properly set the LLM to use. See the guides on [Configure your API key](../models/llm_api_key_setup.md) or [Deploy a local LLM](../models/deploy_local_llm.mdx) for more information.

2. You have a knowledge base configured and the corresponding files properly parsed. See the guide on [Configure a knowledge base](../dataset/configure_knowledge_base.md) for more information.

:::

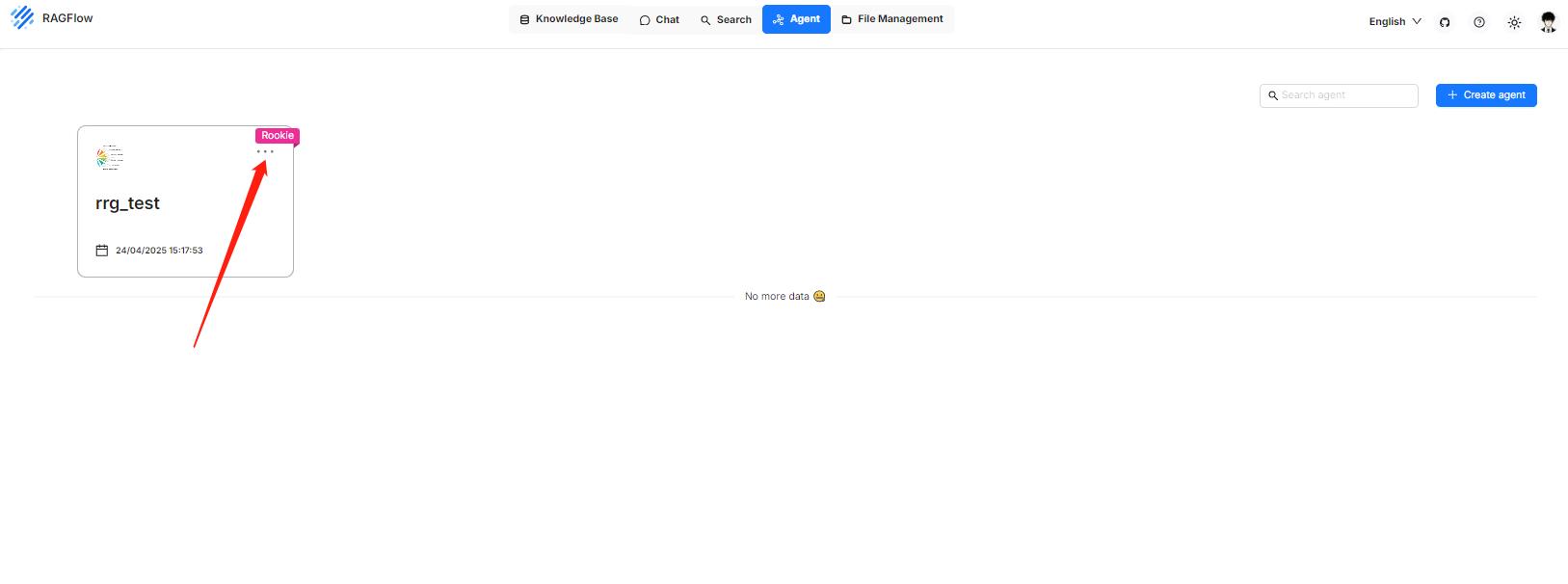

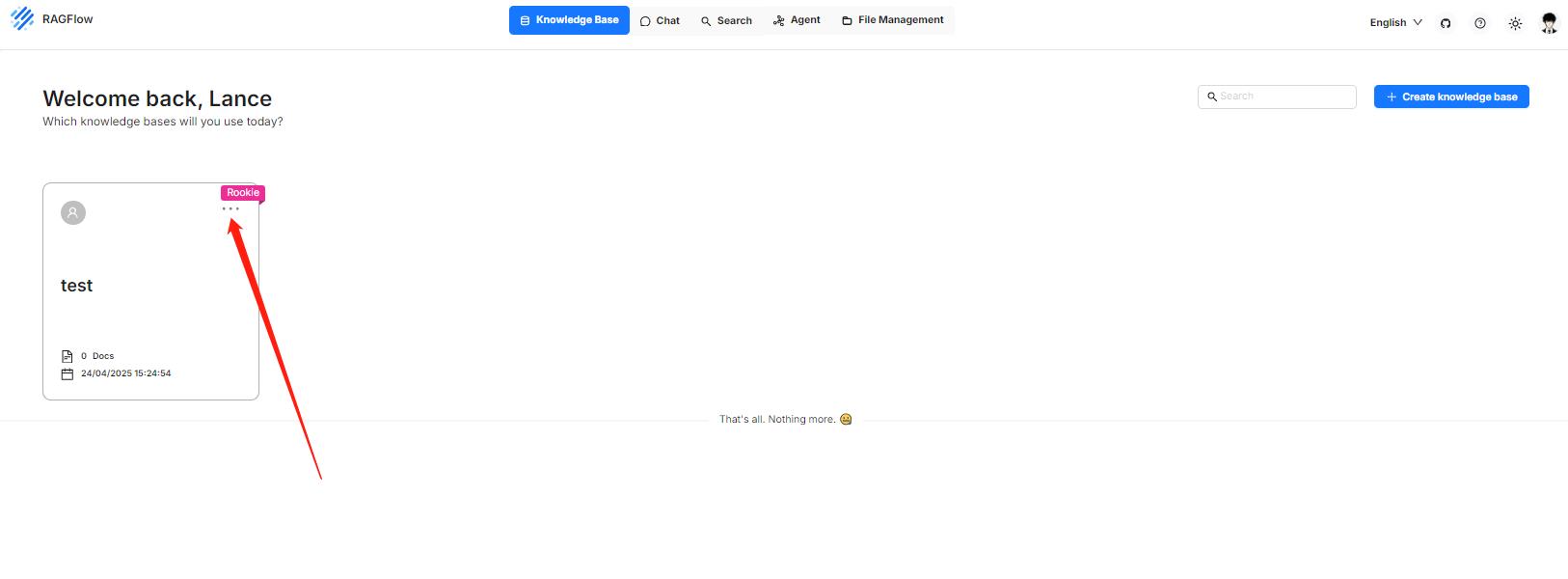

Click the **Agent** tab at the top center of the page to show the **Agent** page. As shown in the screenshot below, the cards on this page represent the created agents, which you can continue to edit.

We also provide templates catered to different business scenarios. You can either generate your agent from one of our agent templates or create one from scratch:

1. Click **+ Create agent** to show the **agent template** page:

2. To create an agent from scratch, click the **Blank** card. Alternatively, to create an agent from one of our templates, hover over the desired card, such as **General-purpose chatbot**, click **Use this template**, name your agent in the pop-up dialogue, and click **OK** to confirm.

*You are now taken to the **no-code workflow editor** page. The left panel lists the components (operators): Above the dividing line are the RAG-specific components; below the line are tools. We are still working to expand the component list.*

3. Generally speaking, you can now do the following:

   - Drag and drop the desired components onto your workflow.

   - Select the knowledge base to use.

   - Update the settings of specific components.

   - Update LLM settings.

   - Set the input and output for a specific component, and more.

4. Click **Save** to apply changes to your agent and **Run** to test it.

## Components

Please review the following descriptions of the RAG-specific components before you proceed:

| Component      | Description |
|----------------|-------------|
| **Retrieval**  | A component that retrieves information from specified knowledge bases and returns 'Empty response' if no information is found. Ensure the correct knowledge bases are selected. |
| **Generate**   | A component that prompts the LLM to generate responses. You must ensure the prompt is set correctly. |
| **Interact**   | A component that serves as the interface between the user and the bot, receiving user inputs and displaying the agent's responses. |
| **Categorize** | A component that uses the LLM to classify user inputs into predefined categories. Ensure you specify the name, description, and examples for each category, along with the corresponding next component. |
| **Message**    | A component that sends out a static message. If multiple messages are supplied, it randomly selects one to send. Ensure its downstream is **Interact**, the interface component. |
| **Rewrite**    | A component that rewrites a user query from the **Interact** component, based on the context of previous dialogues. |
| **Keyword**    | A component that extracts keywords from a user query, with TopN specifying the number of keywords to extract. |

:::caution NOTE

- Ensure **Rewrite**'s upstream component is **Relevant** and downstream component is **Retrieval**.

- Ensure the downstream component of **Message** is **Interact**.

- The downstream component of **Begin** is always **Interact**.

:::
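The connection rules in the note above can be expressed as a small check over a workflow graph. The following is a minimal sketch, not RAGFlow's implementation: it assumes a hypothetical representation in which each component ID maps to a component type and edges are `(upstream, downstream)` pairs. The `validate_workflow` helper and all component IDs are illustrative only.

```python
from collections import defaultdict

# Hypothetical representation: component ID -> component type, plus
# (upstream, downstream) edges. This is NOT RAGFlow's internal DSL.
Components = dict[str, str]
Edges = list[tuple[str, str]]


def validate_workflow(components: Components, edges: Edges) -> list[str]:
    """Check the connection rules for Rewrite, Message, and Begin."""
    upstream: dict[str, set[str]] = defaultdict(set)    # ID -> types feeding into it
    downstream: dict[str, set[str]] = defaultdict(set)  # ID -> types it feeds into
    for src, dst in edges:
        downstream[src].add(components[dst])
        upstream[dst].add(components[src])

    errors = []
    for cid, ctype in components.items():
        if ctype == "Rewrite":
            if upstream[cid] != {"Relevant"}:
                errors.append(f"{cid}: upstream must be Relevant")
            if downstream[cid] != {"Retrieval"}:
                errors.append(f"{cid}: downstream must be Retrieval")
        elif ctype in ("Message", "Begin"):
            if downstream[cid] != {"Interact"}:
                errors.append(f"{cid}: downstream must be Interact")
    return errors


# Example: a layout similar to a reflective chatbot (IDs are made up).
components = {
    "begin": "Begin",
    "interact": "Interact",
    "retrieval": "Retrieval",
    "relevant": "Relevant",
    "generate": "Generate",
    "rewrite": "Rewrite",
}
edges = [
    ("begin", "interact"),
    ("interact", "retrieval"),
    ("retrieval", "relevant"),
    ("relevant", "generate"),
    ("relevant", "rewrite"),
    ("rewrite", "retrieval"),
    ("generate", "interact"),
]
print(validate_workflow(components, edges))  # []
```

The sketch compares sets of component types exactly, so a **Rewrite** component fed by anything other than **Relevant** is flagged; a real editor may apply looser rules.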

## Basic operations

| Operation                 | Description |
|---------------------------|-------------|
| Add a component           | Drag and drop the desired component from the left panel onto the canvas. |
| Delete a component        | On the canvas, hover over the three dots (...) of the component to display the delete option, then select it to remove the component. |
| Copy a component          | On the canvas, hover over the three dots (...) of the component to display the copy option, then select it to make a copy of the component. |
| Update component settings | On the canvas, click the desired component to display the component settings. |

guides\agent\embed_agent_into_webpage.md

---

sidebar_position: 3

slug: /embed_agent_into_webpage

---

# Embed agent into webpage

You can use an iframe to embed an agent into a third-party webpage.

:::caution WARNING

If your agent's **Begin** component takes a variable, you *cannot* embed it into a webpage.

:::

1. Before proceeding, you must [acquire an API key](../models/llm_api_key_setup.md); otherwise, an error message will appear.

2. On the **Agent** page, click an intended agent **>** **Edit** to access its editing page.

3. Click **Embed into webpage** in the top right corner of the canvas to show the **iframe** window.

4. Copy the iframe code and embed it into a specific location on your webpage.

guides\agent\general_purpose_chatbot.md

---

sidebar_position: 2

slug: /general_purpose_chatbot

---

# Create chatbot

Create a general-purpose chatbot.

---

Chatbots are one of the most common AI scenarios. However, effectively understanding user queries and responding appropriately remains a challenge. RAGFlow's general-purpose chatbot agent is our attempt to tackle this longstanding issue.

This chatbot closely resembles the chatbot introduced in [Start an AI chat](../chat/start_chat.md), with one key difference: it introduces a reflective mechanism that improves retrieval from the target knowledge bases by rewriting the user's query.

This document guides you through creating such a chatbot using our chatbot template.

## Prerequisites

1. Ensure you have properly set the LLM to use. See the guides on [Configure your API key](../models/llm_api_key_setup.md) or [Deploy a local LLM](../models/deploy_local_llm.mdx) for more information.

2. Ensure you have a knowledge base configured and the corresponding files properly parsed. See the guide on [Configure a knowledge base](../dataset/configure_knowledge_base.md) for more information.

3. Make sure you have read the [Introduction to Agentic RAG](./agent_introduction.md).

## Create a chatbot agent from template

To create a general-purpose chatbot agent using our template:

1. Click the **Agent** tab at the top center of the page to show the **Agent** page.

2. Click **+ Create agent** on the top right of the page to show the **agent template** page.

3. On the **agent template** page, hover over the **General-purpose chatbot** card and click **Use this template**.

*You are now directed to the **no-code workflow editor** page.*

:::tip NOTE

RAGFlow's no-code editor spares you the trouble of coding, making agent development effortless.

:::

## Understand each component in the template

Here’s a breakdown of each component and its role and requirements in the chatbot template:

- **Begin**
  - Function: Sets an opening greeting for users.
  - Purpose: Establishes a welcoming atmosphere and prepares the user for interaction.

- **Interact**
  - Function: Serves as the interface between the human and the bot.
  - Role: Acts as the downstream component of **Begin**.

- **Retrieval**
  - Function: Retrieves information from the specified knowledge base(s).
  - Requirement: Must have `knowledgebases` set up to function.

- **Relevant**
  - Function: Assesses the relevance of the information retrieved by the **Retrieval** component to the user query.
  - Process:
    - If relevant, it directs the data to the **Generate** component for final response generation.
    - Otherwise, it triggers the **Rewrite** component to refine the user query and redo the retrieval process.

- **Generate**
  - Function: Prompts the LLM to generate responses based on the retrieved information.
  - Note: The prompt settings control how the LLM generates responses. Be sure to review the prompts and make any necessary changes.

- **Rewrite**
  - Function: Refines a user query when no relevant information is retrieved from the knowledge base.
  - Usage: Often used in conjunction with **Relevant** and **Retrieval** to create a reflective/feedback loop.
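The **Retrieval**, **Relevant**, **Rewrite**, and **Generate** components described above form a loop that can be sketched as plain control flow. This is a hypothetical illustration, not RAGFlow's code: `retrieve`, `is_relevant`, `rewrite`, and `generate` are stand-in callables (in the real template each is a search- or LLM-backed component), and `max_loops` stands in for the **Rewrite** component's maximum loop times setting.

```python
from typing import Callable


def reflective_answer(
    query: str,
    retrieve: Callable[[str], list[str]],
    is_relevant: Callable[[str, list[str]], bool],
    rewrite: Callable[[str], str],
    generate: Callable[[str, list[str]], str],
    max_loops: int = 3,
) -> str:
    """Retrieve, check relevance, and rewrite the query until the results are relevant."""
    chunks = retrieve(query)                                     # Retrieval
    loops = 0
    while not is_relevant(query, chunks) and loops < max_loops:  # Relevant
        query = rewrite(query)                                   # Rewrite: refine the query
        chunks = retrieve(query)                                 # Retrieval: search again
        loops += 1
    # Generate. Assumption: once the loop budget is spent, this sketch simply
    # answers from the last retrieval; RAGFlow's actual fallback may differ.
    return generate(query, chunks)


# Toy stand-ins for the components (all hypothetical).
kb = {"docker serving port": ["Change 80:80 in docker-compose.yml to <YOUR_SERVING_PORT>:80."]}


def retrieve(q: str) -> list[str]:
    return kb.get(q, [])


def is_relevant(q: str, chunks: list[str]) -> bool:
    return bool(chunks)


def rewrite(q: str) -> str:
    return "docker serving port"


def generate(q: str, chunks: list[str]) -> str:
    return chunks[0] if chunks else "Empty response"


answer = reflective_answer("How do I change the port?", retrieve, is_relevant, rewrite, generate)
print(answer)
```

The first retrieval finds nothing, so the query is rewritten once and the second retrieval succeeds. Each extra loop adds another rewrite and retrieval round trip, which is why raising the loop limit can noticeably slow down responses.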

## Configure your chatbot agent

1. Click **Begin** to set an opening greeting.

2. Click **Retrieval** to select the right knowledge base(s) and make any necessary adjustments.

3. Click **Generate** to configure the LLM's summarization behavior:

   1. Confirm the model.
   2. Review the prompt settings. If there are variables, ensure they match the correct component IDs.

4. Click **Relevant** to review or change its settings.

   *You may retain the current settings, but feel free to experiment with changes to understand how the agent operates.*

5. Click **Rewrite** to select a different model for query rewriting or to update the maximum loop times.

:::danger NOTE

Increasing the maximum loop times may significantly extend the time required to receive the final response.

:::

6. Update your workflow wherever necessary.

7. Click **Save** to apply your changes.

*Your agent appears as one of the agent cards on the **Agent** page.*

## Test your chatbot agent

1. Find your chatbot agent on the **Agent** page.

2. Ask some questions to verify that the chatbot functions as intended.

guides\agent\agent_component_reference_category_.json

{

"label": "Agent Components",

"position": 20,

"link": {

"type": "generated-index",

"description": "A complete reference for RAGFlow's agent components."

}

}

guides\agent\agent_component_reference\begin.mdx

---

sidebar_position: 1

slug: /begin_component

---

# Begin component

The starting component in a workflow.

---

The **Begin** component sets an opening greeting or accepts inputs from the user. It is automatically populated onto the canvas when you create an agent, whether from a template or from scratch (from a blank template). There should be only one **Begin** component in the workflow.

## Scenarios

A **Begin** component is essential in all cases. Every agent includes a **Begin** component, which cannot be deleted.

## Configurations

Click the component to display its **Configuration** window. Here, you can set an opening greeting and the input parameters (global variables) for the agent.

### ID

The ID is the unique identifier for the component within the workflow. Unlike the IDs of other components, the ID of the **Begin** component *cannot* be changed.

### Opening greeting

An opening greeting is the agent's first message to the user. It can be a welcoming remark or an instruction to guide the user forward.

### Global variables

You can set global variables, either required or optional, within the **Begin** component. Users then provide values for these variables when interacting or chatting with the agent; required variables must be filled in. Click **+ Add variable** to add a global variable. Each variable has the following attributes:

- **Key**: *Required*

  The unique variable name.

- **Name**: *Required*

  A descriptive name providing additional details about the variable.

  For example, if **Key** is set to `lang`, you can set its **Name** to `Target language`.

- **Type**: *Required*

  The type of the variable:

  - **line**: Accepts a single line of text without line breaks.
  - **paragraph**: Accepts multiple lines of text, including line breaks.
  - **options**: Requires the user to select a value for this variable from a dropdown menu. You must set *at least* one option for the dropdown menu.
  - **file**: Requires the user to upload one or more files.
  - **integer**: Accepts an integer as input.
  - **boolean**: Requires the user to toggle between on and off.

- **Optional**: A toggle indicating whether the variable is optional.
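To make the variable types above concrete, the sketch below checks a user's inputs against a list of **Begin** variable definitions. It is a hypothetical illustration, not RAGFlow's code: the `BeginVariable` dataclass, its field names (mirroring **Key**, **Name**, **Type**, and **Optional**), and `validate_inputs` are assumptions made for this example, and an uploaded **file** value is represented simply as a non-empty list of file names.

```python
from dataclasses import dataclass, field


@dataclass
class BeginVariable:
    key: str                 # Key: unique variable name
    name: str                # Name: descriptive label
    type: str                # line | paragraph | options | file | integer | boolean
    optional: bool = False   # Optional toggle
    options: list[str] = field(default_factory=list)  # dropdown values for "options"

    def __post_init__(self) -> None:
        # An "options" variable needs at least one dropdown option.
        if self.type == "options" and not self.options:
            raise ValueError(f"{self.key}: set at least one option")


def validate_inputs(variables: list[BeginVariable], inputs: dict) -> list[str]:
    """Return one error message per missing or mistyped value."""
    errors = []
    for var in variables:
        if var.key not in inputs:
            if not var.optional:
                errors.append(f"{var.key}: required")
            continue
        value = inputs[var.key]
        if var.type == "line" and (not isinstance(value, str) or "\n" in value):
            errors.append(f"{var.key}: expected a single line of text")
        elif var.type == "paragraph" and not isinstance(value, str):
            errors.append(f"{var.key}: expected text")
        elif var.type == "options" and value not in var.options:
            errors.append(f"{var.key}: must be one of {var.options}")
        elif var.type == "file" and not (isinstance(value, list) and value):
            errors.append(f"{var.key}: expected one or more files")
        elif var.type == "integer" and (isinstance(value, bool) or not isinstance(value, int)):
            errors.append(f"{var.key}: expected an integer")
        elif var.type == "boolean" and not isinstance(value, bool):
            errors.append(f"{var.key}: expected on/off (True/False)")
    return errors


variables = [
    BeginVariable(key="lang", name="Target language", type="options",
                  options=["English", "Chinese"]),
    BeginVariable(key="text", name="Text to translate", type="paragraph"),
    BeginVariable(key="formal", name="Formal tone", type="boolean", optional=True),
]
print(validate_inputs(variables, {"lang": "English", "text": "Hello,\nworld"}))  # []
print(validate_inputs(variables, {"lang": "French"}))  # lang is not a listed option; text is missing
```

The optional `formal` variable can be omitted without an error, while the required `text` variable cannot.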

:::tip NOTE

To pass in parameters from a client, call:

- HTTP method [Converse with agent](../../../references/http_api_reference.md#converse-with-agent), or

- Python method [Converse with agent](../../../references/python_api_reference.md#converse-with-agent).

:::

:::danger IMPORTANT

- If you set the variable type to **file**, ensure the token count of the uploaded file does not exceed your model provider's maximum token limit; otherwise, the plain text in your file will be truncated and incomplete.

- If your agent's **Begin** component takes a variable, you *cannot* embed it into a webpage.

:::

## Examples

As mentioned earlier, the **Begin** component is indispensable for an agent. For an example of a **Begin** component that takes two global variables, take a look at our three-step interpreter agent template:

1. Click the **Agent** tab at the top center of the page to access the **Agent** page.

2. Click **+ Create agent** on the top right of the page to open the **agent template** page.

3. On the **agent template** page, hover over the **Interpreter** card and click **Use this template**.